LCMD AI Pod

Lead the way by owning your personal AI supercomputer. An AI performance beast built for hardcore developers: 275T computing power, 64GB VRAM, and built to play with CUDA.

¥ 16599

Supercomputer, Supercar, Super Cool

It'll be a Joy to Put Anywhere.

Its design cleverly blends the sci-fi brilliance of Star Wars with the sweeping curves of a supercar. With its computing power running at full speed, it opens up an AI sci-fi feast that carries you across the galaxy.

Why Choose LCMD AI Pod?

275T Super Computing Power

The NVIDIA chip delivers a genuine 275T of computing power and is fully compatible with the NVIDIA CUDA AI ecosystem. For non-CUDA AI chips, the quoted AI performance is only a theoretical hardware figure; without support from the CUDA ecosystem, their actual AI computing power is discounted by about 50%.

64GB Massive VRAM

Run 70B ~ 671B large AI models at home and have your own personal AI assistant. With 64GB of VRAM, it is built for large models and runs text-to-image and text-to-video models with ease.
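Whether a particular model fits in 64GB depends mainly on its parameter count and quantization. As a rough sketch (a common rule of thumb, not an LCMD tool), weight memory is parameters × bits per weight ÷ 8. The KV cache, activations, and runtime overhead come on top of that, so treat the result as a lower bound. The function names below are illustrative.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 10**9 bytes).

    Lower bound only: KV cache, activations and runtime overhead are not included.
    """
    return num_params * bits_per_weight / 8 / 1e9


def weights_fit(num_params: float, bits_per_weight: float, vram_gb: float = 64.0) -> bool:
    """True if the weights alone fit within the VRAM budget (64GB by default)."""
    return weight_memory_gb(num_params, bits_per_weight) <= vram_gb


if __name__ == "__main__":
    # A 70B model at common precisions: FP16, 8-bit and 4-bit quantization.
    for bits in (16, 8, 4):
        size = weight_memory_gb(70e9, bits)
        print(f"70B @ {bits}-bit: ~{size:.0f} GB of weights, fits in 64GB: {weights_fit(70e9, bits)}")
```

Plug in the parameter count and quantization of any model you plan to run to check it against the 64GB budget before downloading it.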

Compatible with CUDA Ecosystem

NVIDIA chips offer the best CUDA support: large AI models and software run out of the box as soon as they are downloaded. With non-CUDA AI chips, a downloaded model can take months of adaptation before it can even be tested. In the AI era, falling behind by a day means falling behind for good.

Get Started with AI in 5 Minutes

Ubuntu comes pre-installed, compatible with CUDA acceleration drivers and AI development environments. Combined with the LCMD MicroServer's self-developed AI applications, beginners need only 5 minutes to go from unboxing to running an AI model. Getting started with AI shrinks from several months to just 5 minutes, easing the anxiety of keeping up with the AI era.

Bring Your AI Lab Home

It offers computing power comparable to a small workstation and supports research-level computing. It has been purchased by the AI laboratories of many universities (such as Wuhan University, Huazhong University of Science and Technology, Shantou University, Xiamen University, and Peking University) for cutting-edge AI research projects. Value-added tax invoices can be issued, and 3 years of technical after-sales service is included. Apply for an educational discount and accelerate your research!

Unlock All Your AI Potential with One Device

Full-scenario coverage, from model development and application deployment to content creation. AI applications include web search, RAG search, text-to-image, text-to-video, web summarization, AI input, web screenshot to text, audio/video summarization, web text-to-speech, video to text, and more, all installed with one click and no configuration, so you can keep up with AI trends in real time. Popular AI inference frameworks such as Ollama, vLLM, and Shimmy are supported. (Note: the AI software must be purchased as a bundle with the LCMD MicroServer.)

Say Goodbye to Cloud Queues and Expensive Bills

Cloud GPU bills devour budgets like a bottomless pit. With a one-time investment in an AI Pod, you gain a private, local AI supercomputer. With mainstream AI cloud services, annual subscription fees for more than 10 AI applications easily exceed 20,000 RMB. Add up your own subscription fees and see how much you could save in a year.
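The comparison above reduces to simple arithmetic. Below is a minimal sketch that uses the ¥16,599 price quoted on this page. The annual subscription total is your own figure, and any running cost (electricity, the optional MicroServer bundle) is an input you supply, defaulting to zero. The function names are illustrative.

```python
POD_PRICE_RMB = 16599  # price quoted on this page


def break_even_months(annual_subscription_rmb: float,
                      device_price_rmb: float = POD_PRICE_RMB,
                      annual_running_cost_rmb: float = 0.0) -> float:
    """Months until the one-time purchase costs less than continuing to subscribe."""
    monthly_saving = (annual_subscription_rmb - annual_running_cost_rmb) / 12
    if monthly_saving <= 0:
        return float("inf")  # the purchase never pays for itself
    return device_price_rmb / monthly_saving


def first_year_savings(annual_subscription_rmb: float,
                       device_price_rmb: float = POD_PRICE_RMB,
                       annual_running_cost_rmb: float = 0.0) -> float:
    """Net saving after 12 months; a negative value means it has not paid off yet."""
    return annual_subscription_rmb - annual_running_cost_rmb - device_price_rmb


if __name__ == "__main__":
    annual = 20000  # the subscription figure cited above; replace with your own total
    print(f"Break-even after {break_even_months(annual):.1f} months")
    print(f"First-year saving: {first_year_savings(annual):,.0f} RMB")
```

Passing a non-zero `annual_running_cost_rmb` makes the estimate more conservative; the break-even point moves later as running costs rise.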

Your Data Belongs Only to You

Core code, trade secrets, private datasets... are you really comfortable uploading them to the cloud? Local deployment eliminates the privacy risks of uploading data to the cloud, and physical isolation meets the strictest data security requirements. Own it now and protect your core digital assets.

Leading the Way Starts with Owning Your Own Computing Engine

In the era of private AI supercomputing, the moat is your unique industry knowledge and data. Owning a private AI supercomputer lets you validate ideas one step faster than others. Founding edition users have already successfully developed AI applications with the AI Pod. Take action, and join the leaders now!

A Money-Making Tool

The AI Pod is designed specifically for AI model inference, with a total power consumption of only 64W. It takes just 4 seconds to generate an image, so running 24 hours a day it can produce 20,000 images, for a daily electricity cost of less than 0.3 RMB. It not only saves you AI subscription fees but can also earn you money.
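The throughput and electricity figures can be checked with a few lines of arithmetic. This sketch assumes the Pod draws its full 64W rated power around the clock, which is a worst case. The electricity tariff is a parameter you supply, since it varies by region and is not stated on this page. The function names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def images_per_day(seconds_per_image: float) -> int:
    """Images generated in 24 hours of back-to-back generation."""
    return int(SECONDS_PER_DAY // seconds_per_image)


def daily_energy_kwh(power_watts: float, hours: float = 24.0) -> float:
    """Energy used per day, assuming a constant power draw."""
    return power_watts * hours / 1000


def daily_electricity_cost(power_watts: float, price_per_kwh: float, hours: float = 24.0) -> float:
    """Daily cost, in the same currency as price_per_kwh."""
    return daily_energy_kwh(power_watts, hours) * price_per_kwh


if __name__ == "__main__":
    print("Images per day at 4 s/image:", images_per_day(4))
    print("Energy per day at 64 W:", daily_energy_kwh(64), "kWh")
```

To get a cost, call `daily_electricity_cost(64, price_per_kwh)` with your local tariff. If the Pod idles part of the day, its average draw is below 64W and the real figure will be lower.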

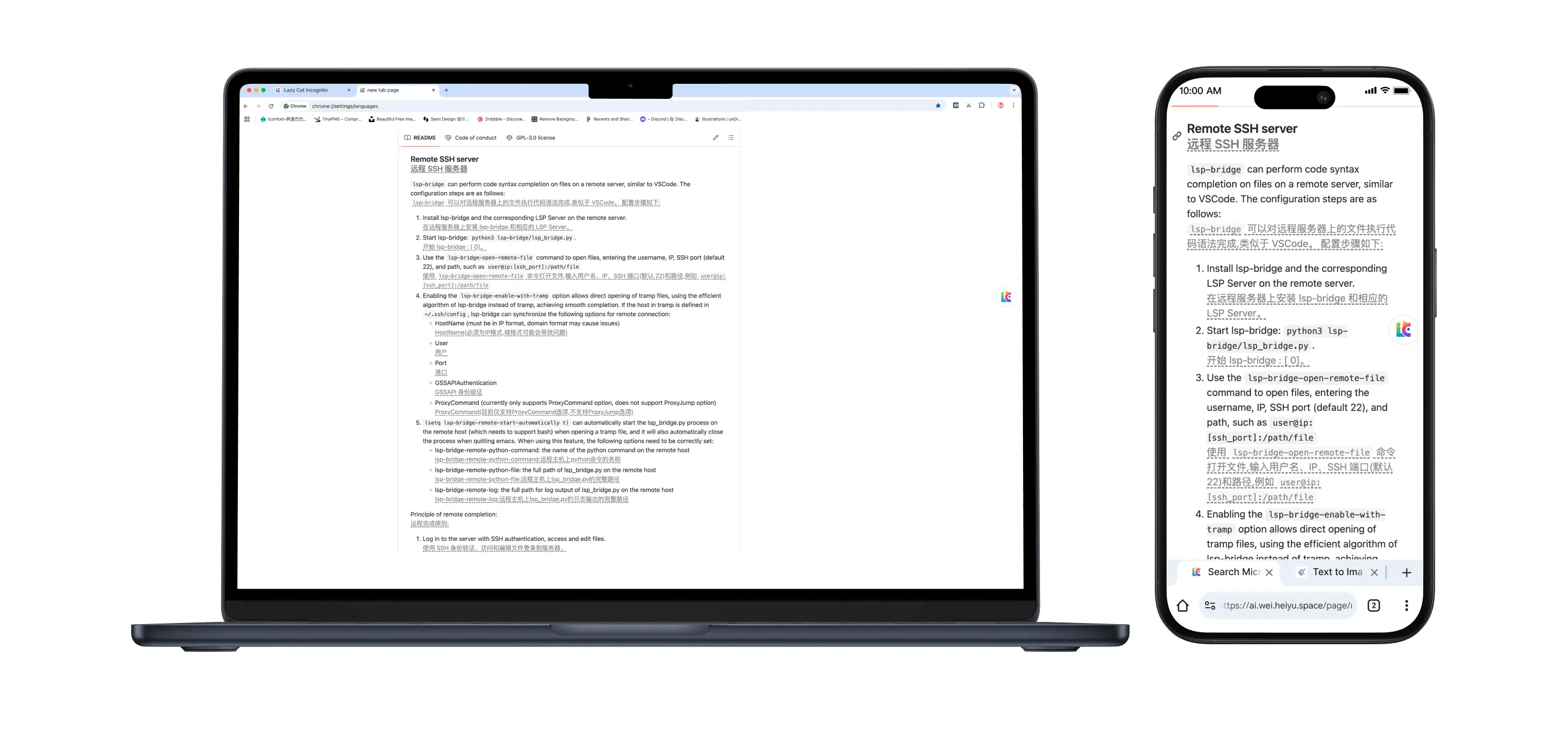

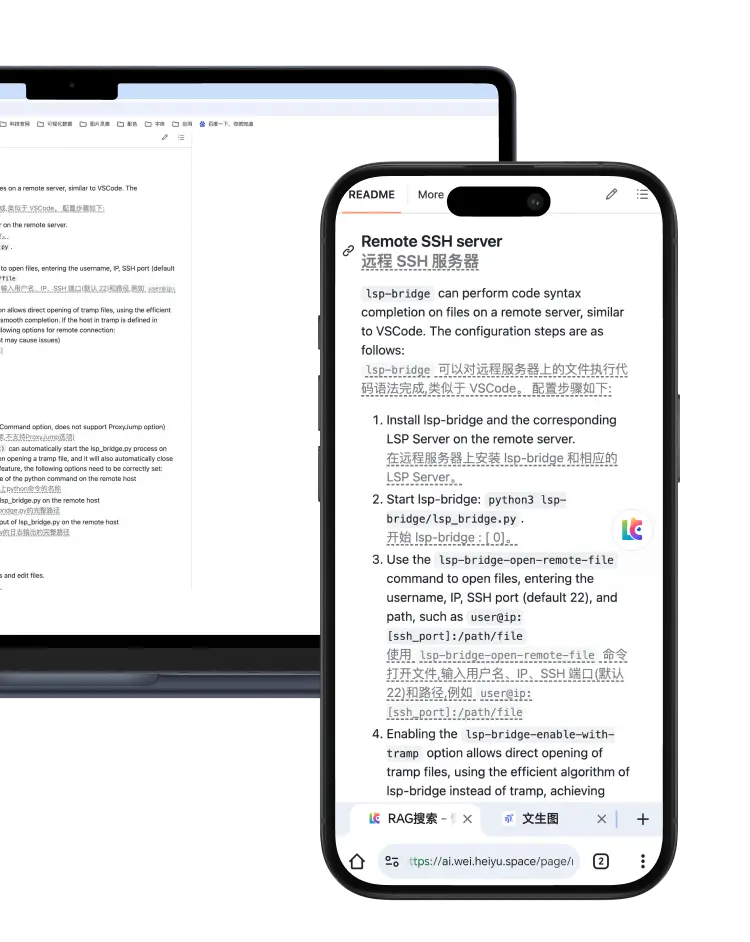

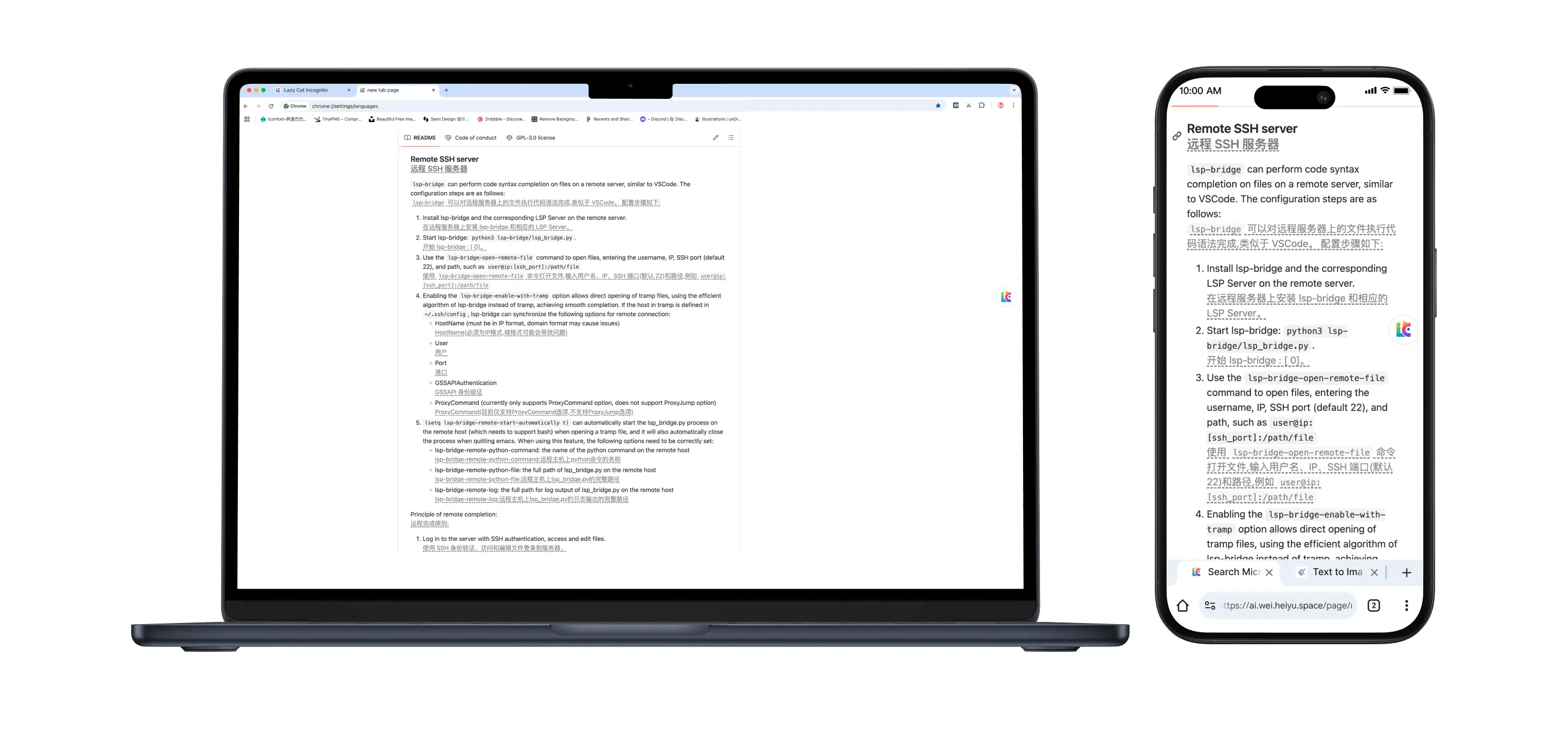

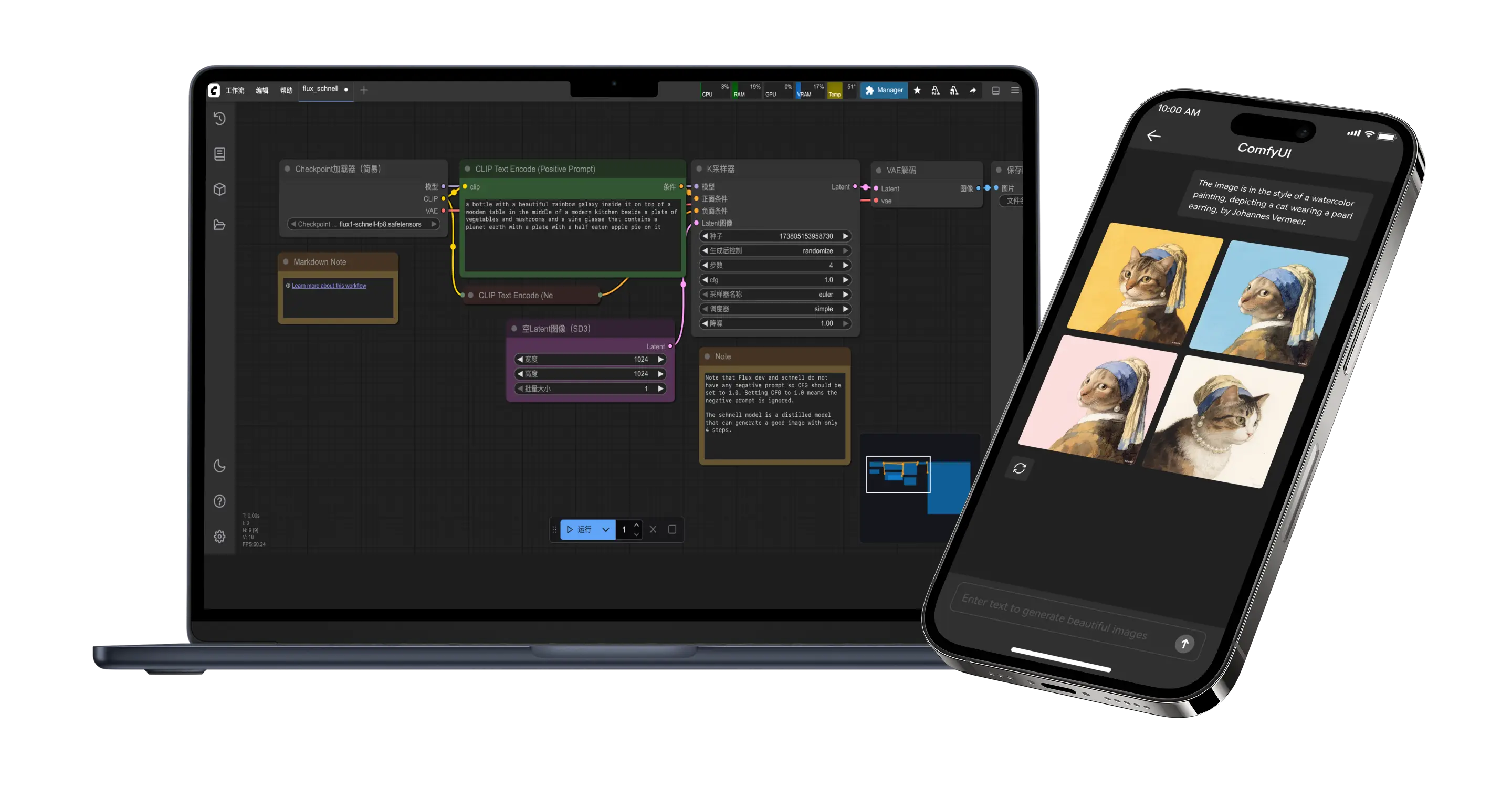

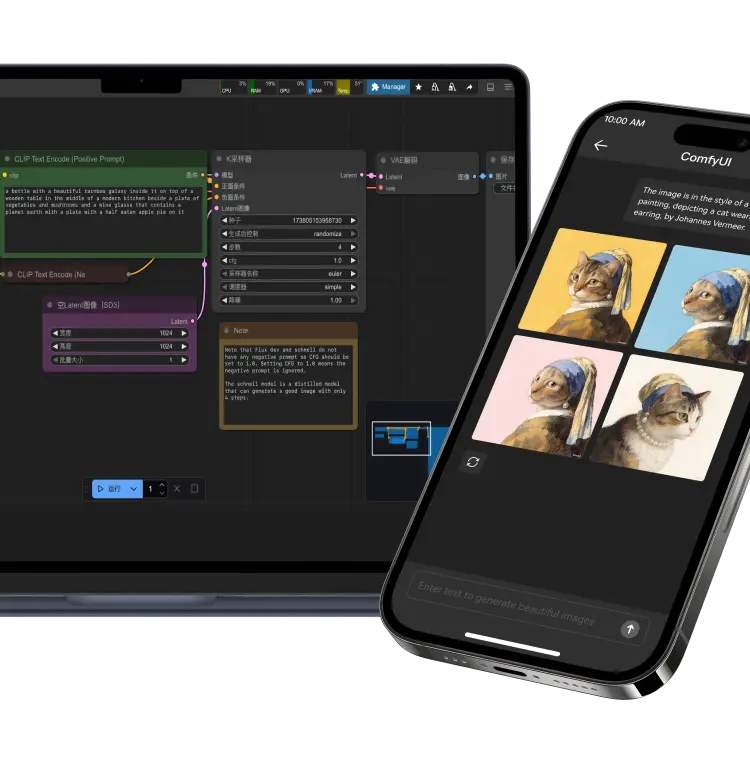

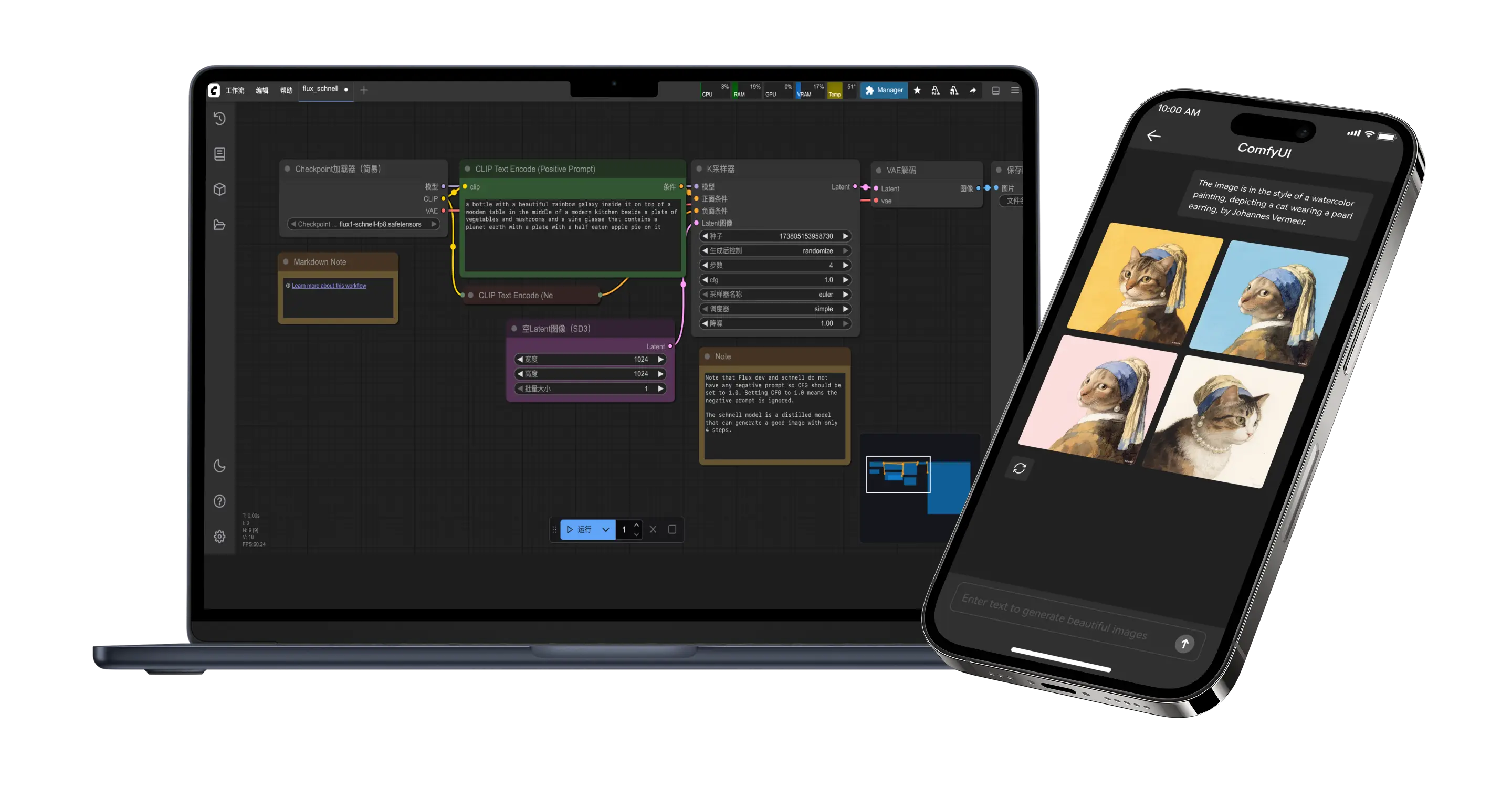

Supercomputer in Your Pocket

Combined with the LCMD MicroServer's unparalleled intranet penetration (NAT traversal) capability, your phone can directly access the AI computing power of the AI Pod at home while you are out. There is no need to buy expensive AI-chip editions of phones, computers, or tablets: one AI Pod upgrades the AI computing power of all your devices, conveniently and cost-effectively.

Dedicated AI R&D Team

We are not selling cold machines, but warm companionship. Whatever AI needs you have, our AI R&D team will follow up on development right away. Buy the hardware and get a powerful AI R&D customization service for free. Incredibly cost-effective.

Super Good-Looking

The exterior design is inspired by Star Wars Stormtroopers and the streamlined lines of Ferrari supercars, exuding a minimalist sci-fi aesthetic reminiscent of starships. It is not just a productivity tool but also a sci-fi ornament for the AI cyber era. Supercomputer, supercar, super cool! It'll be a joy to put anywhere.

Top-notch Service in the Private Cloud Realm

Technical support chat with frontline AI engineers, 18 hours a day, 7 days a week. Tech enthusiasts tinker with the AI Pod after work and learn AI along the way. Come and have fun!

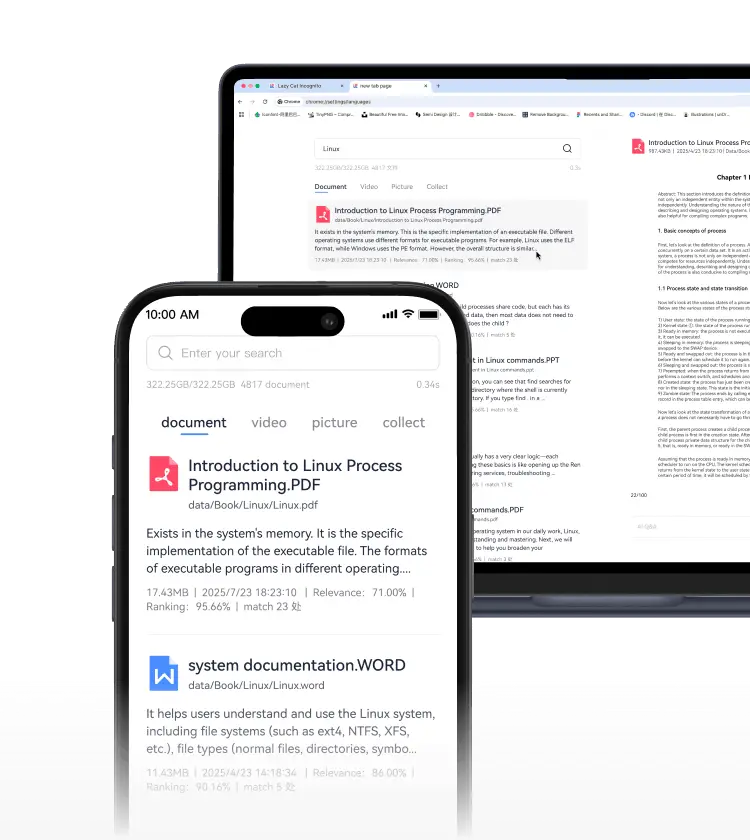

275T Super Computing Power

64GB Massive VRAM

70B ~ 671B Large Model

Unlimited Tokens

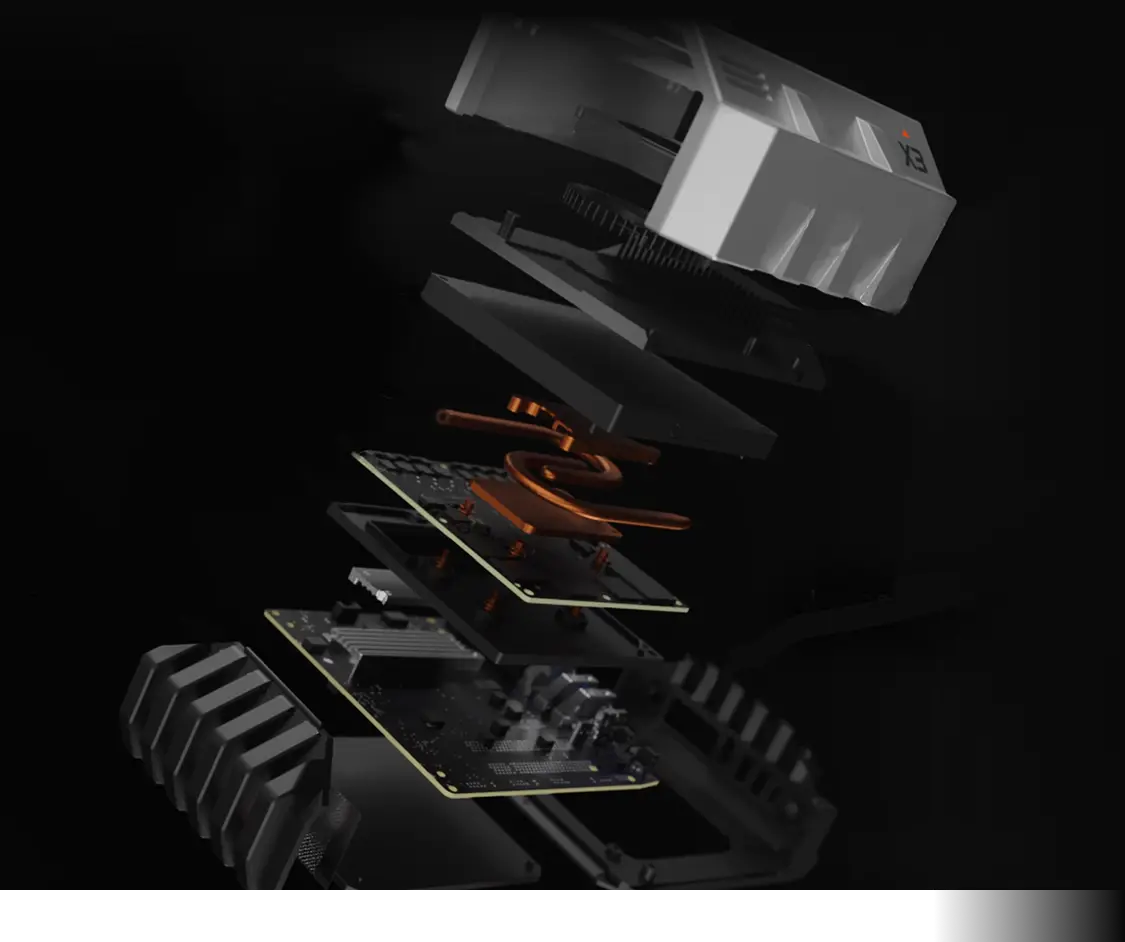

Nvidia Jetson Orin 64GB

NVIDIA Chip, a Genuine 275T of Computing Power

64 Tensor Cores | 2048 Cores | Max 1.3 GHz

Built-in CPU Saves You Money

ARM Cortex®-A78AE CPU | 12 Cores | Max 2.2 GHz

Super VRAM, Enough at Once

LPDDR5 | 204.8GB/s | Up to 64GB

All-SSD, Top Performance

Model Storage: Up to 32TB | 2 x M.2 Slots

System Storage: 64GB | eMMC 5.1

Compatible with CUDA Ecosystem, No Hassle

Private Deployment 70B ~ 671B Large Model

Dual LAN Ports, More Powerful

1 x HDMI, 1 x Type-C | 2 x USB 3.2 | Dual LAN Ports (10G/2.5G)

Super Power-Efficient

64W

Focused on Inference, Extremely Quiet

Video AI Processing Capability

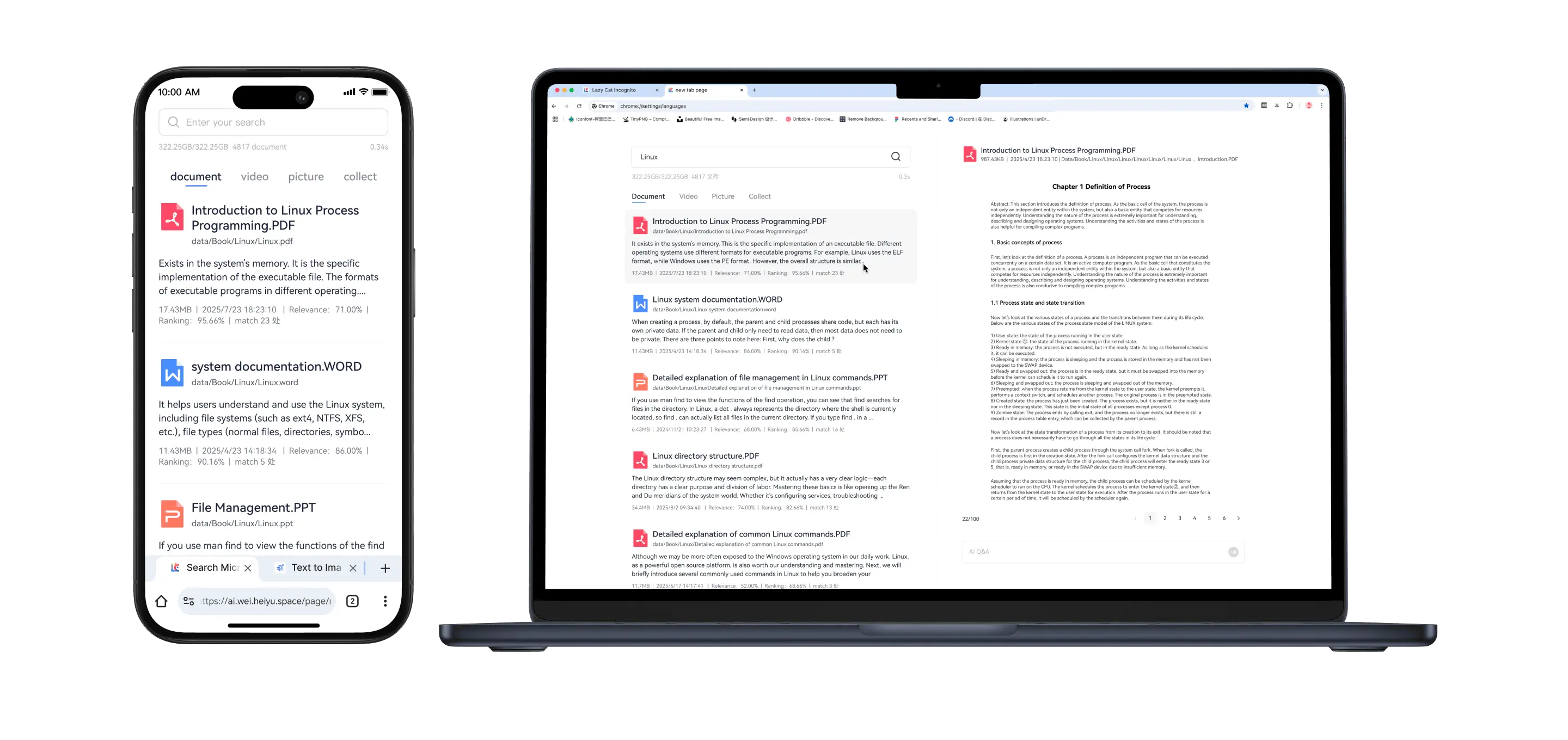

Search Within the LCMD MicroServer for 100% Privacy and Security

Search millions of files in 1 second, including videos, office documents, and images, and boost office efficiency 1000-fold.

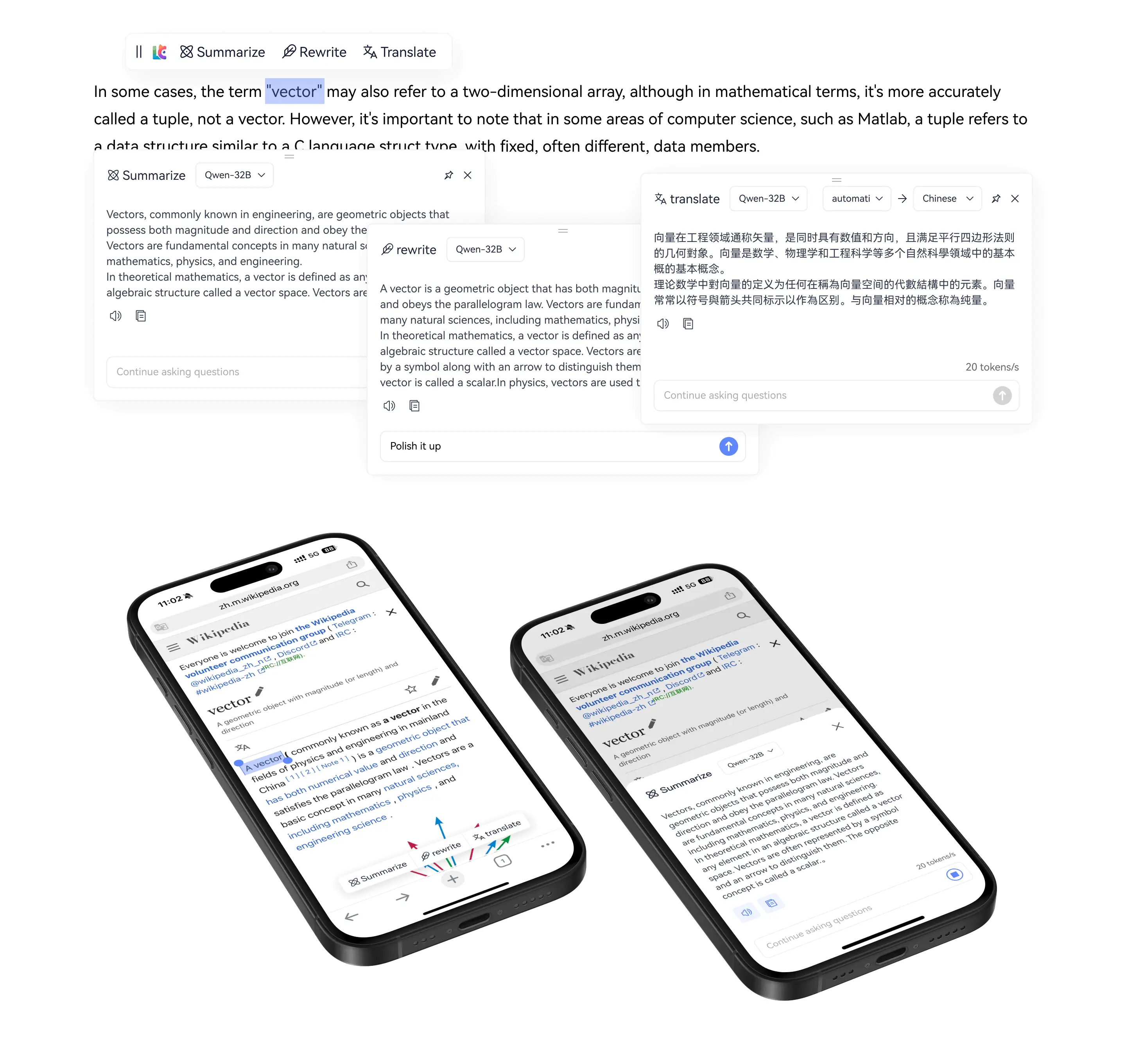

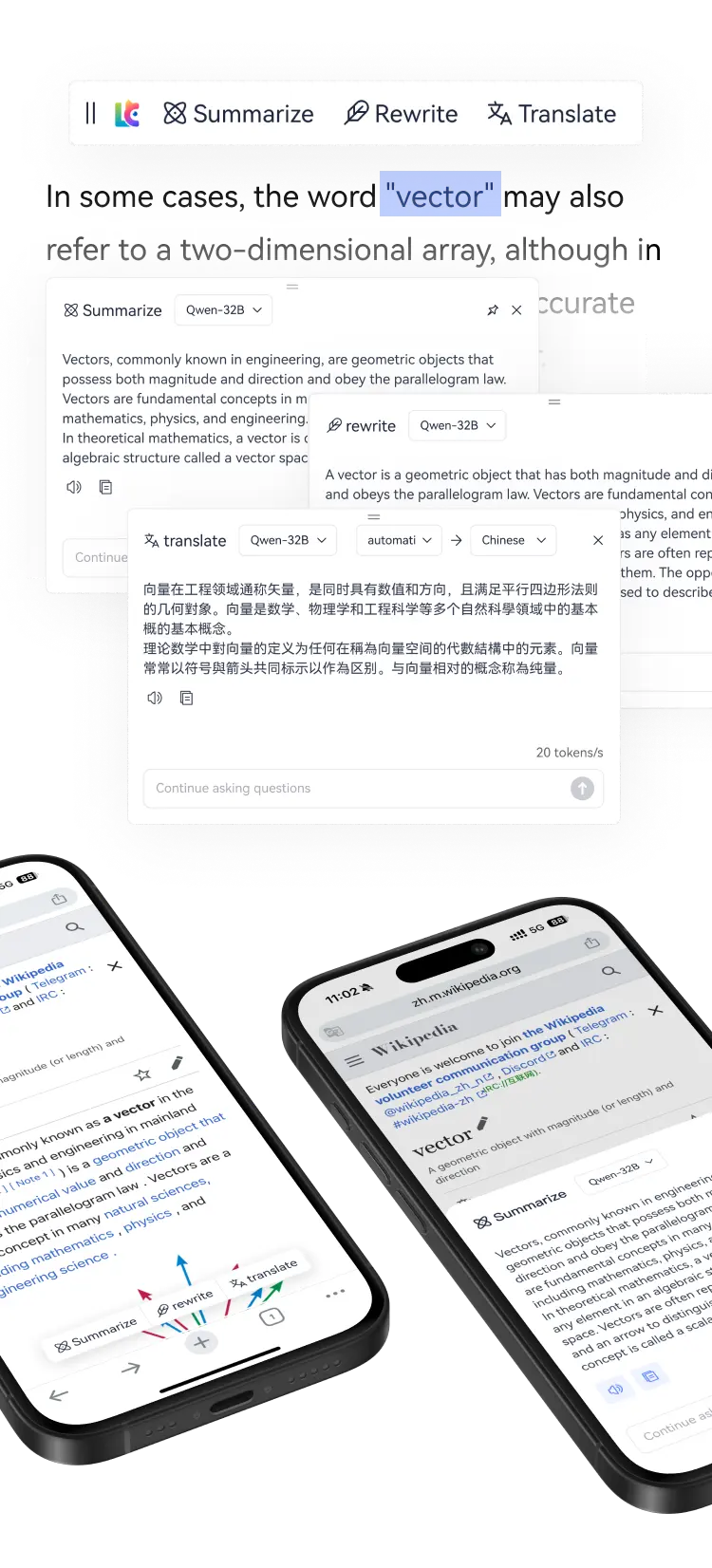

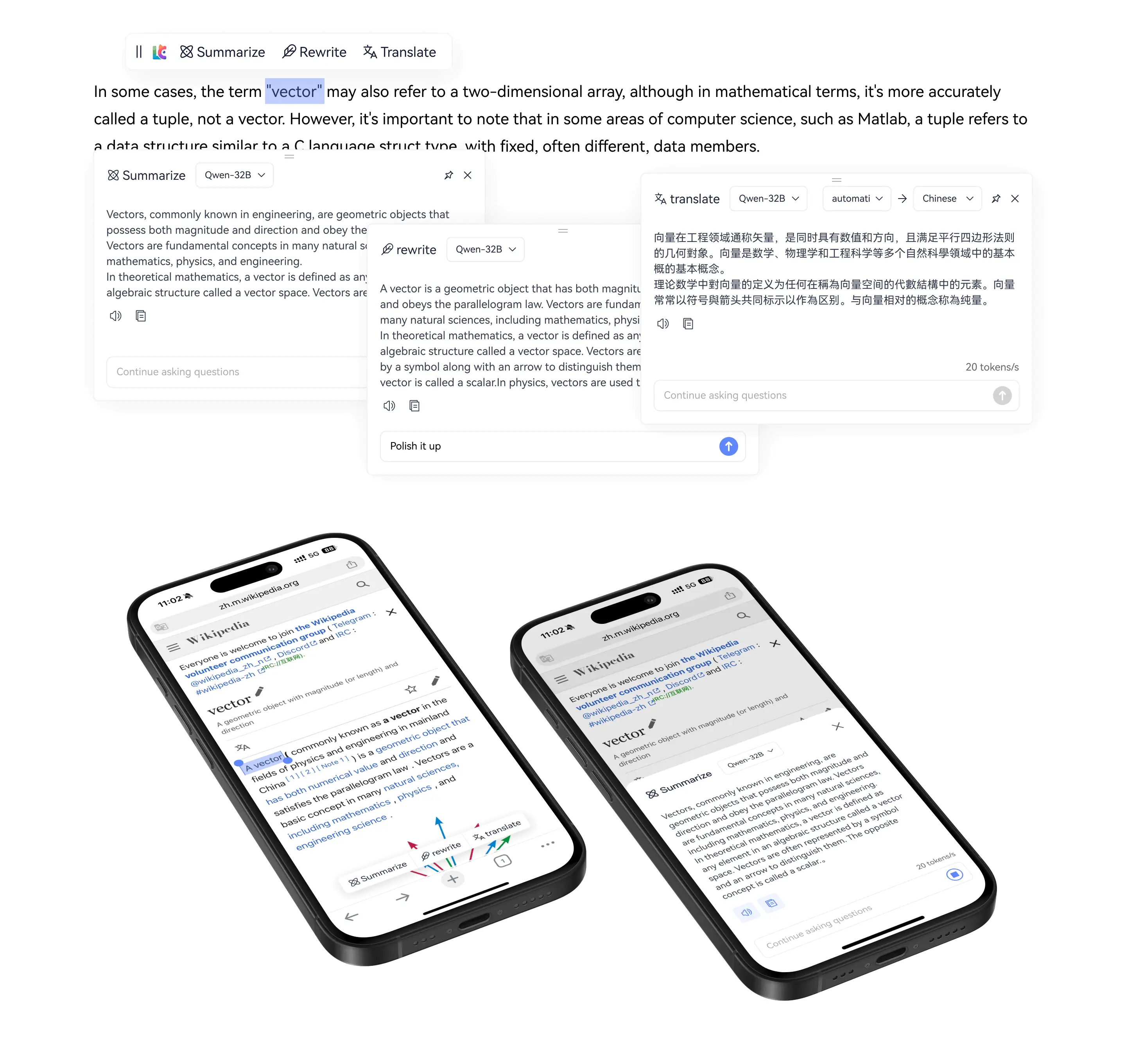

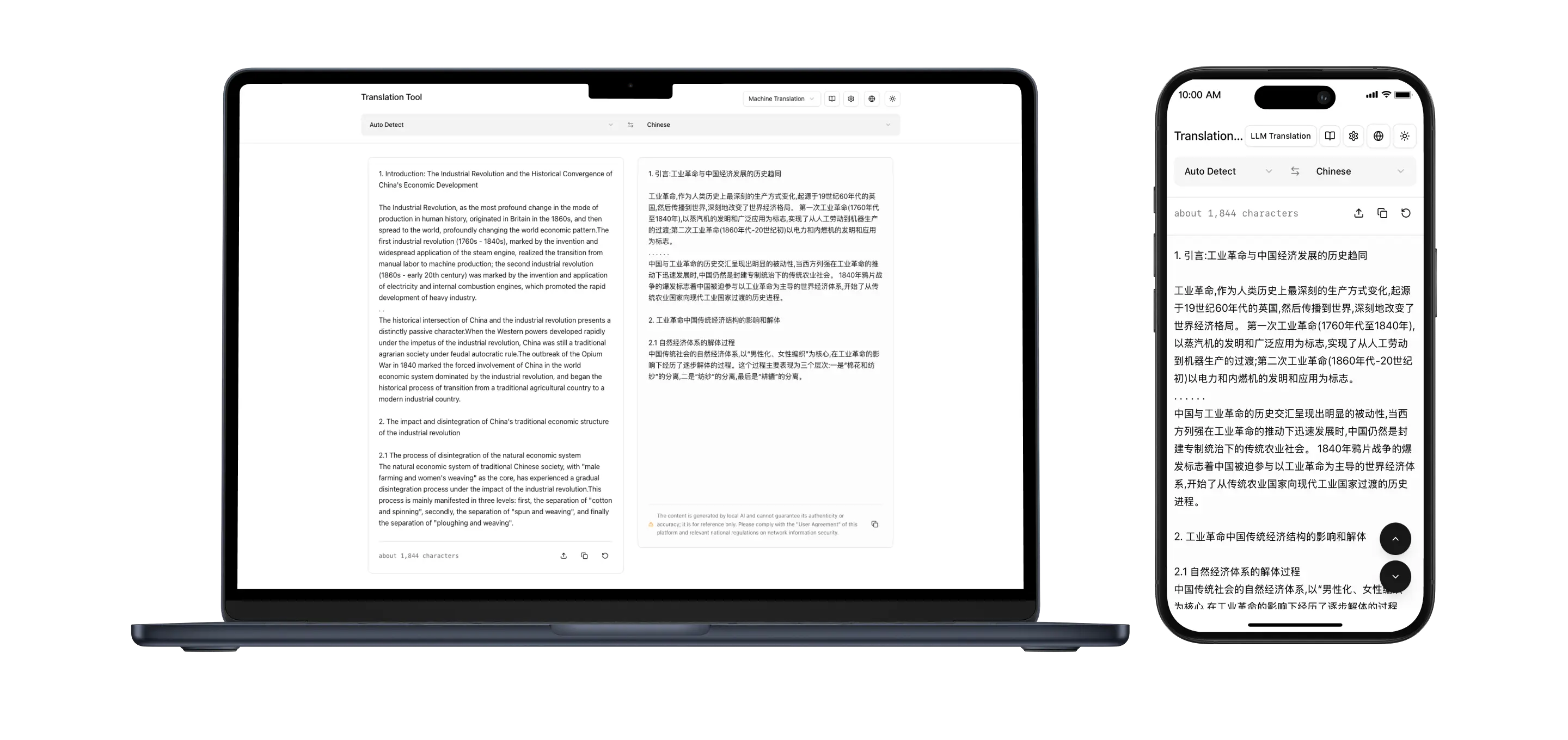

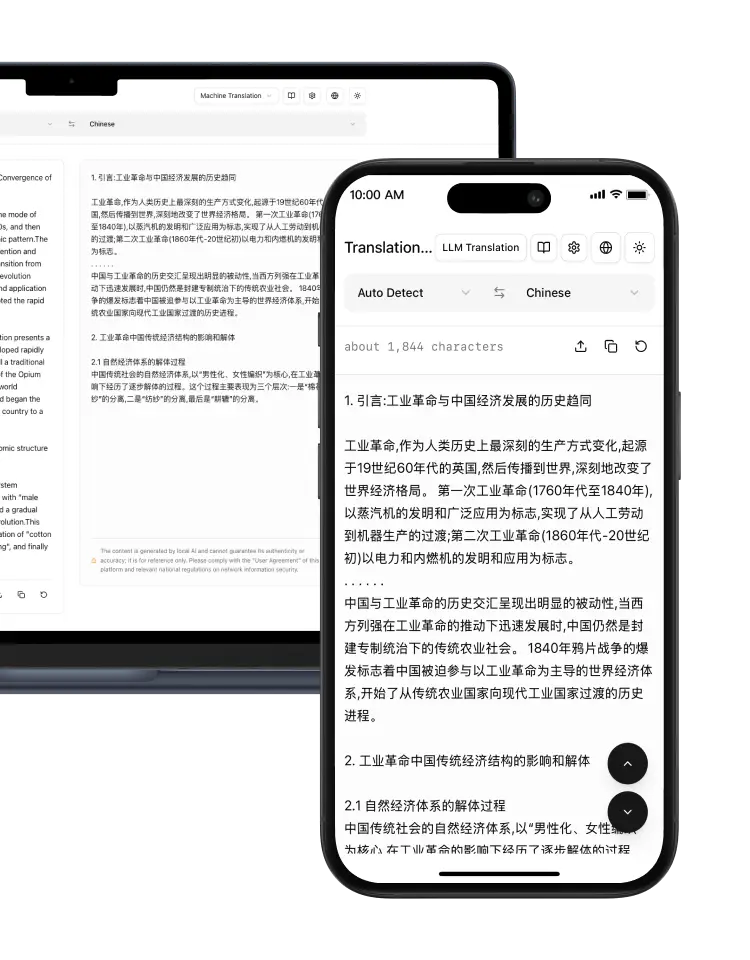

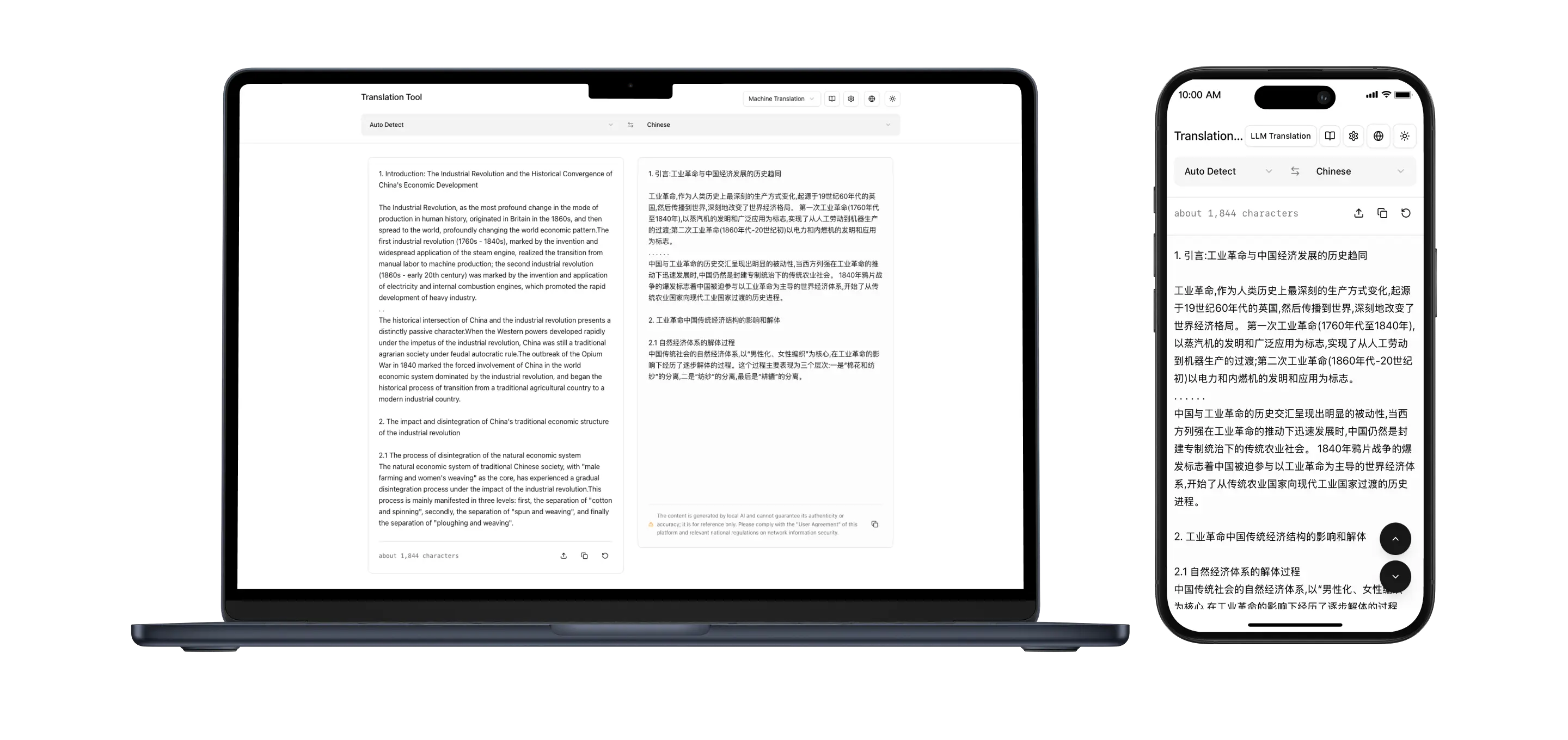

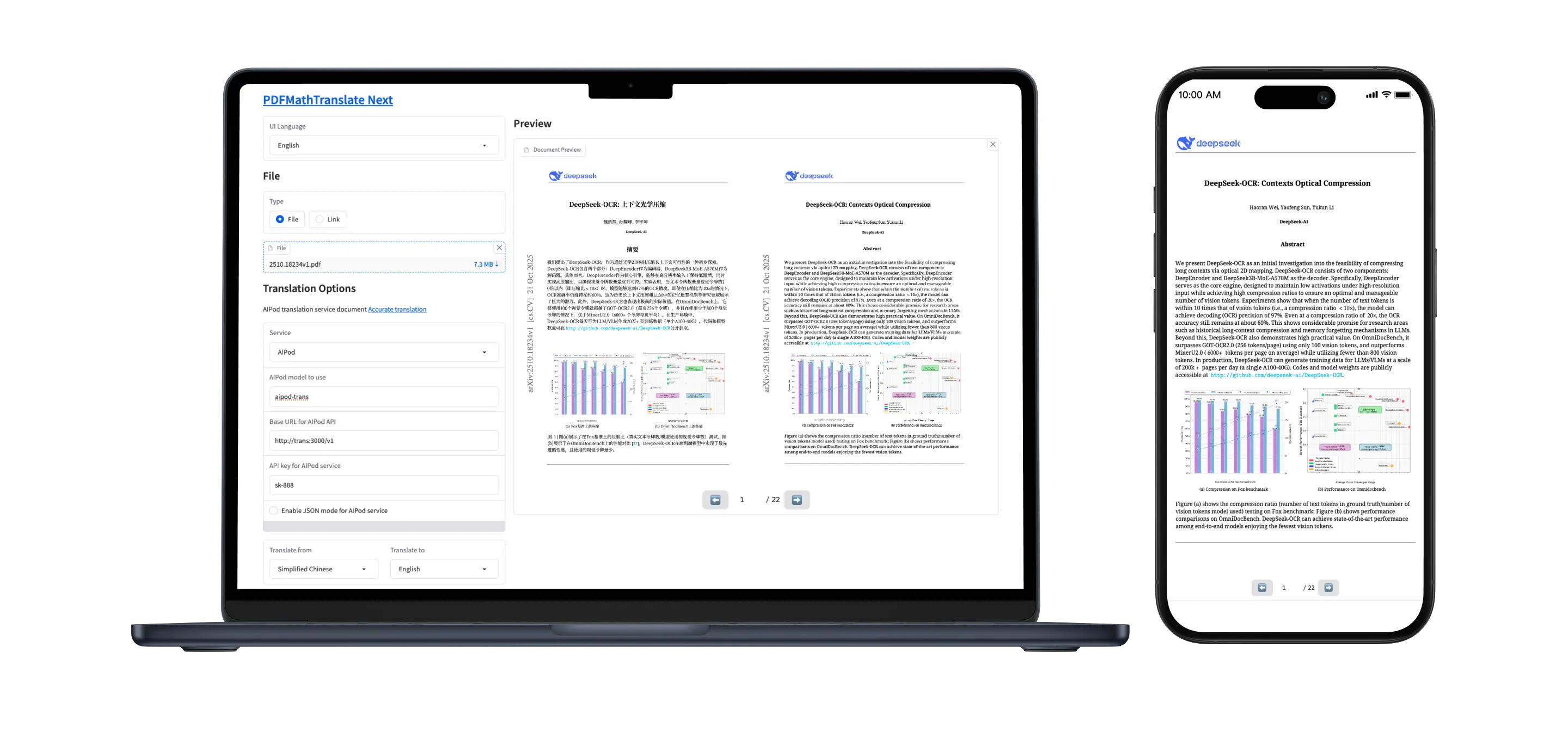

Immersive AI Translation, Super Fast

Ultra-low 5ms translation latency: read and translate instantly, with unlimited free translation

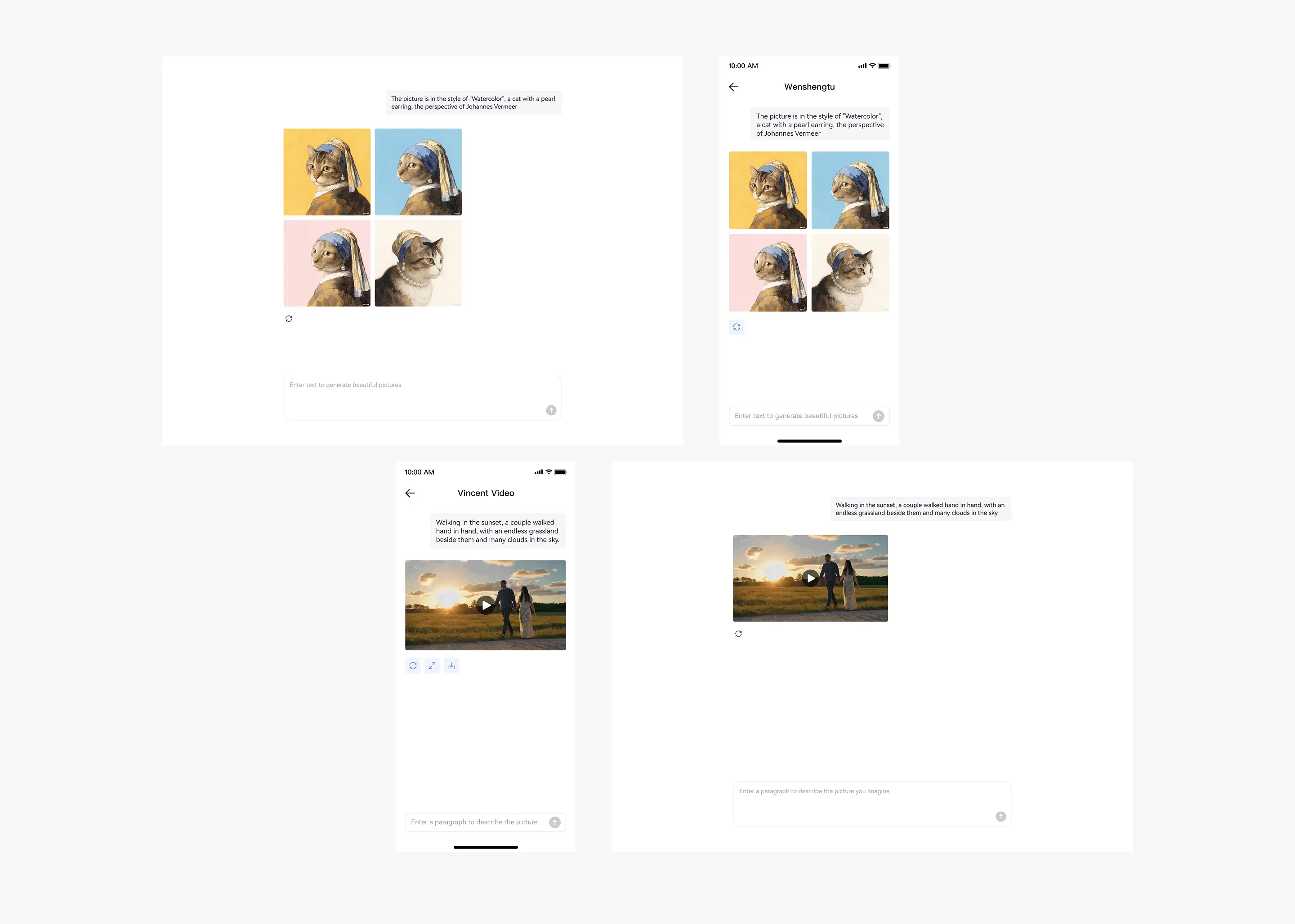

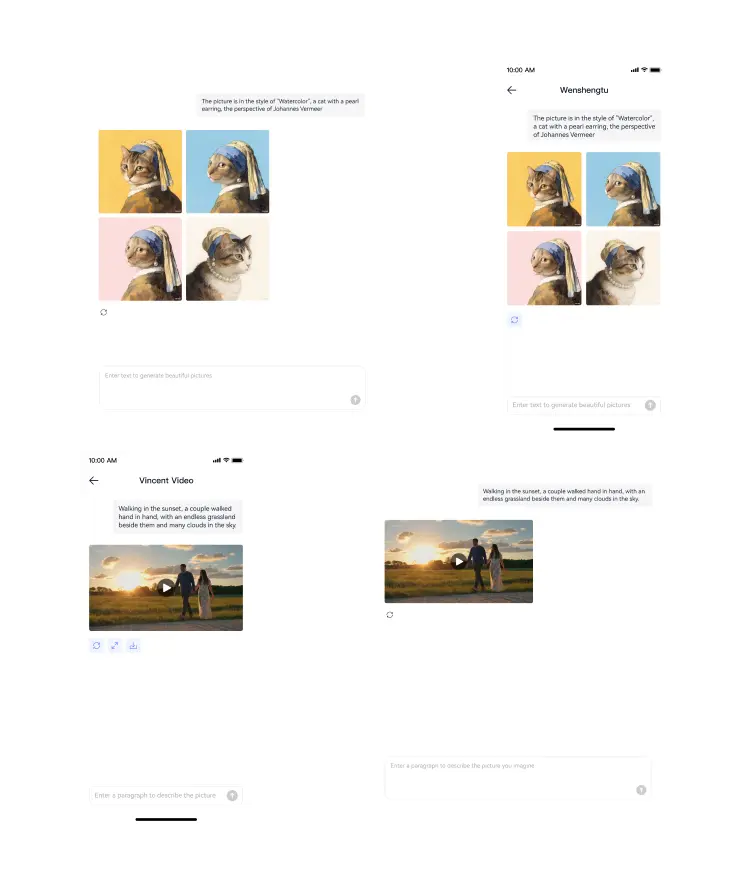

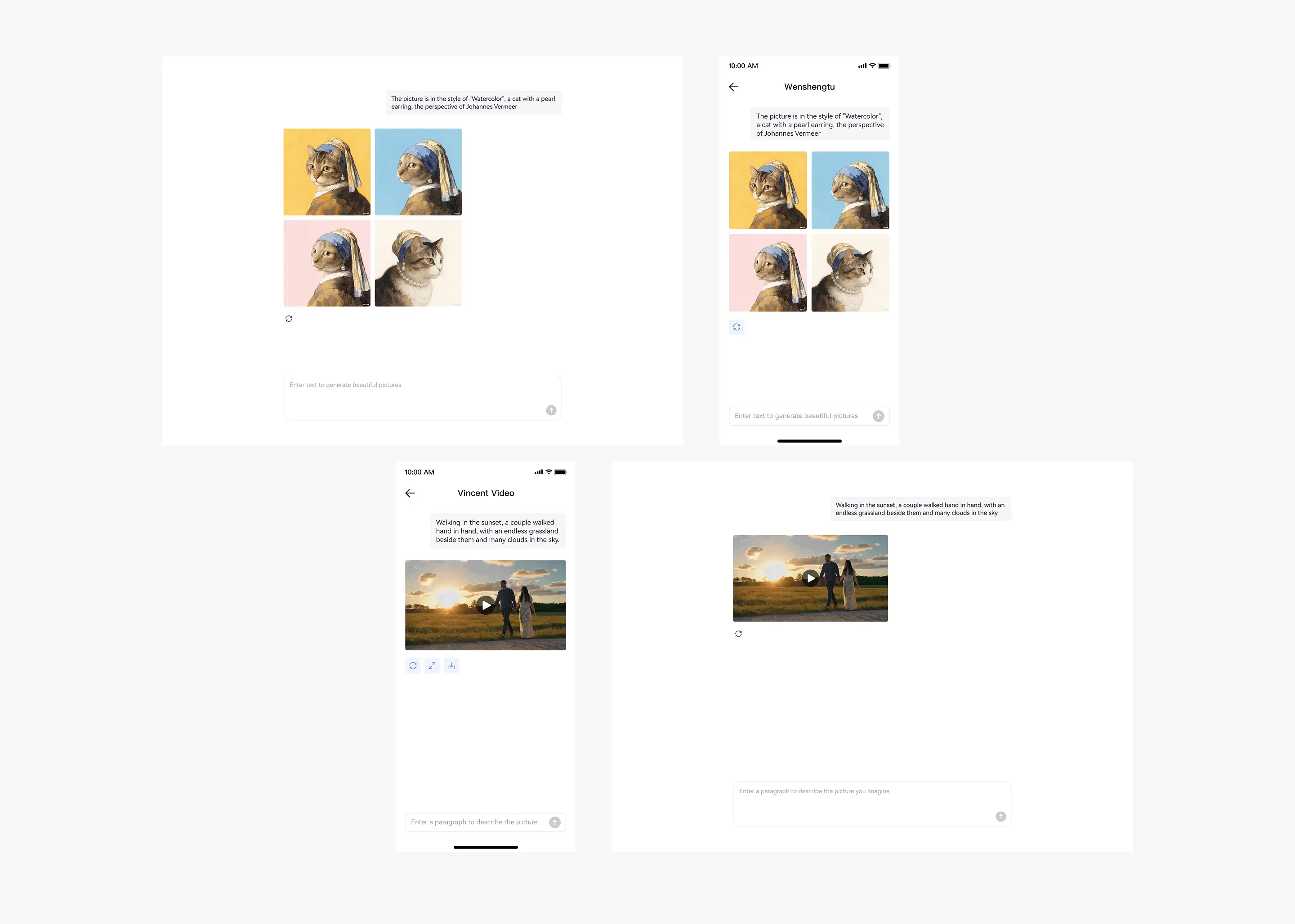

Large Text-to-Video Models Require 64GB VRAM to Run

Supports text-to-image and text-to-video. It takes just 4 seconds to generate an image, so running 24 hours a day it can produce 20,000 images, for a daily electricity cost of less than 0.3 RMB.

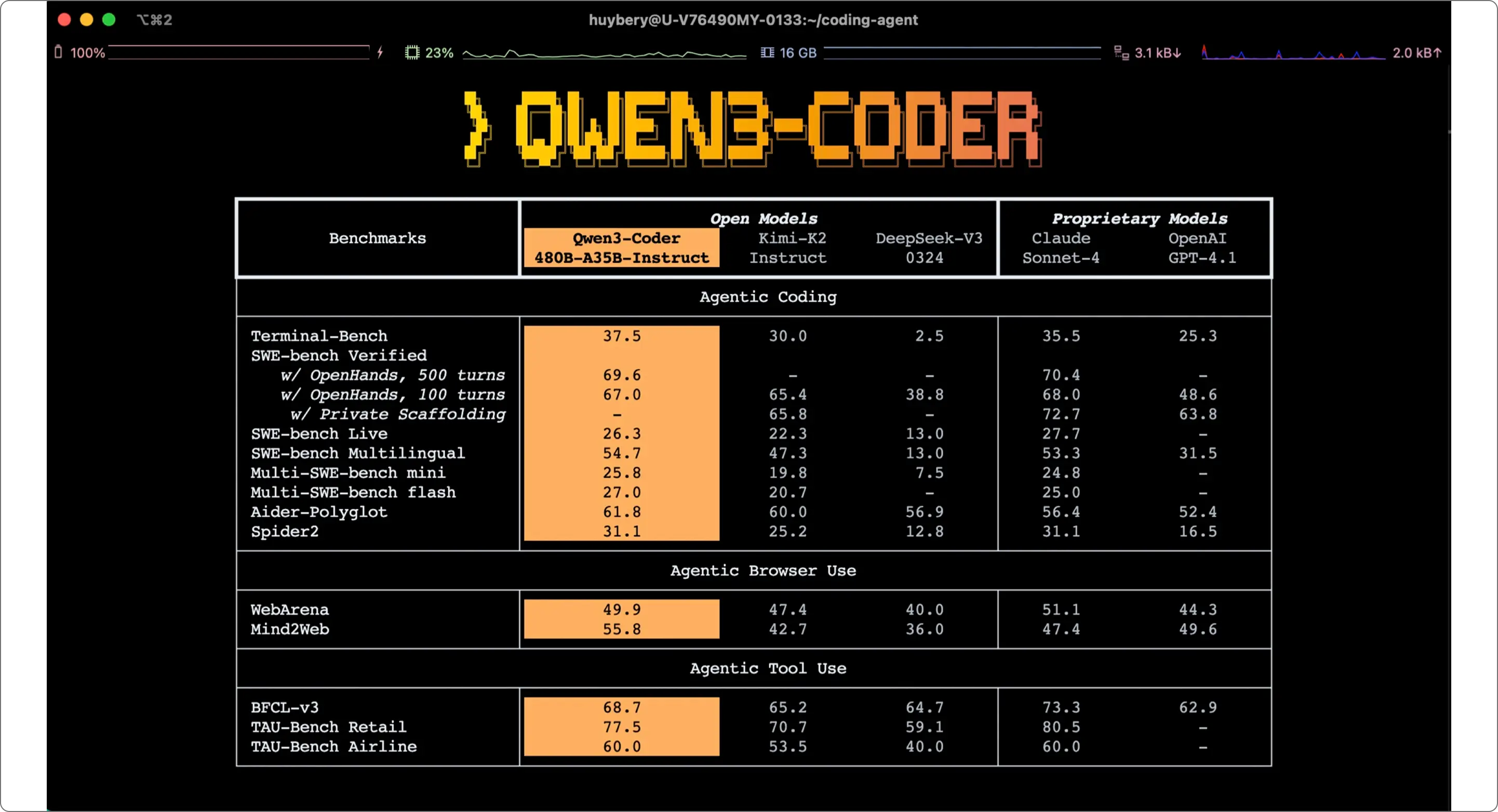

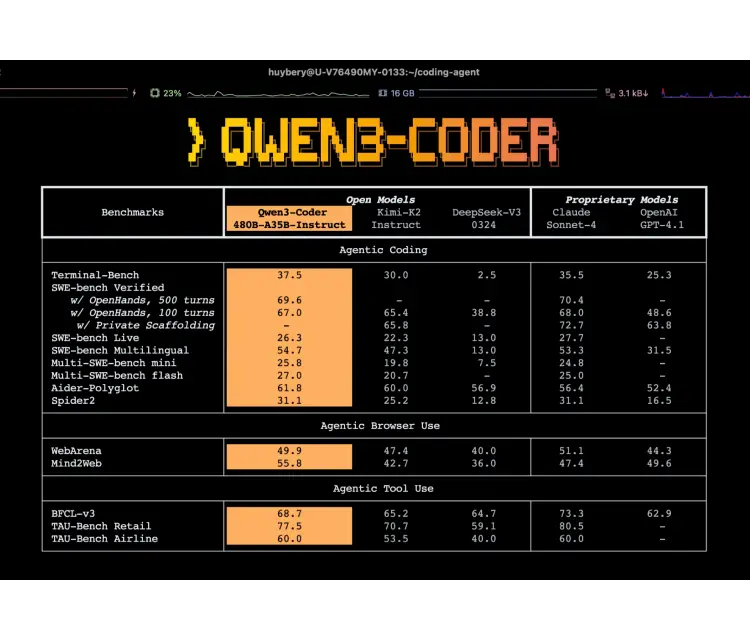

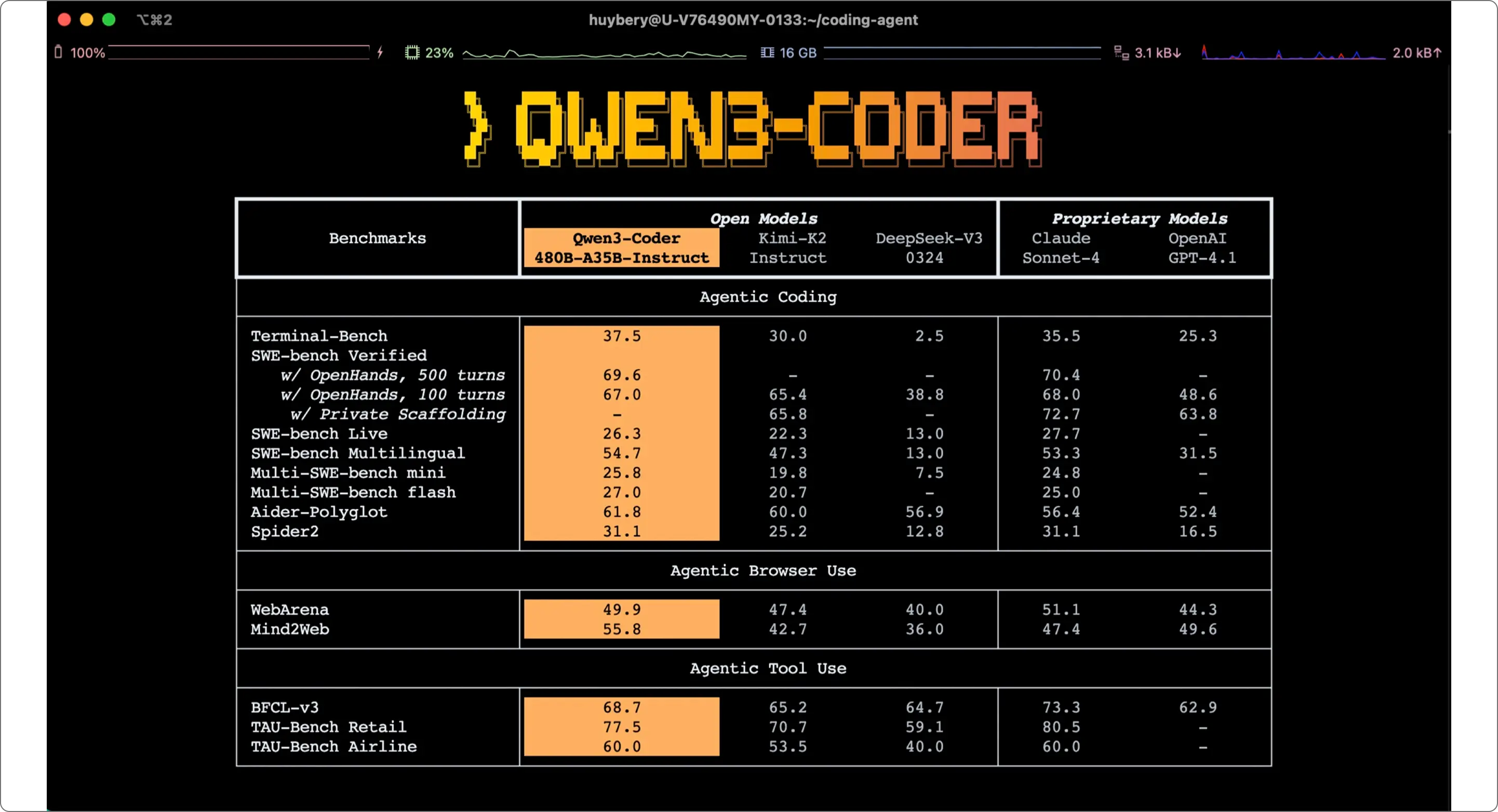

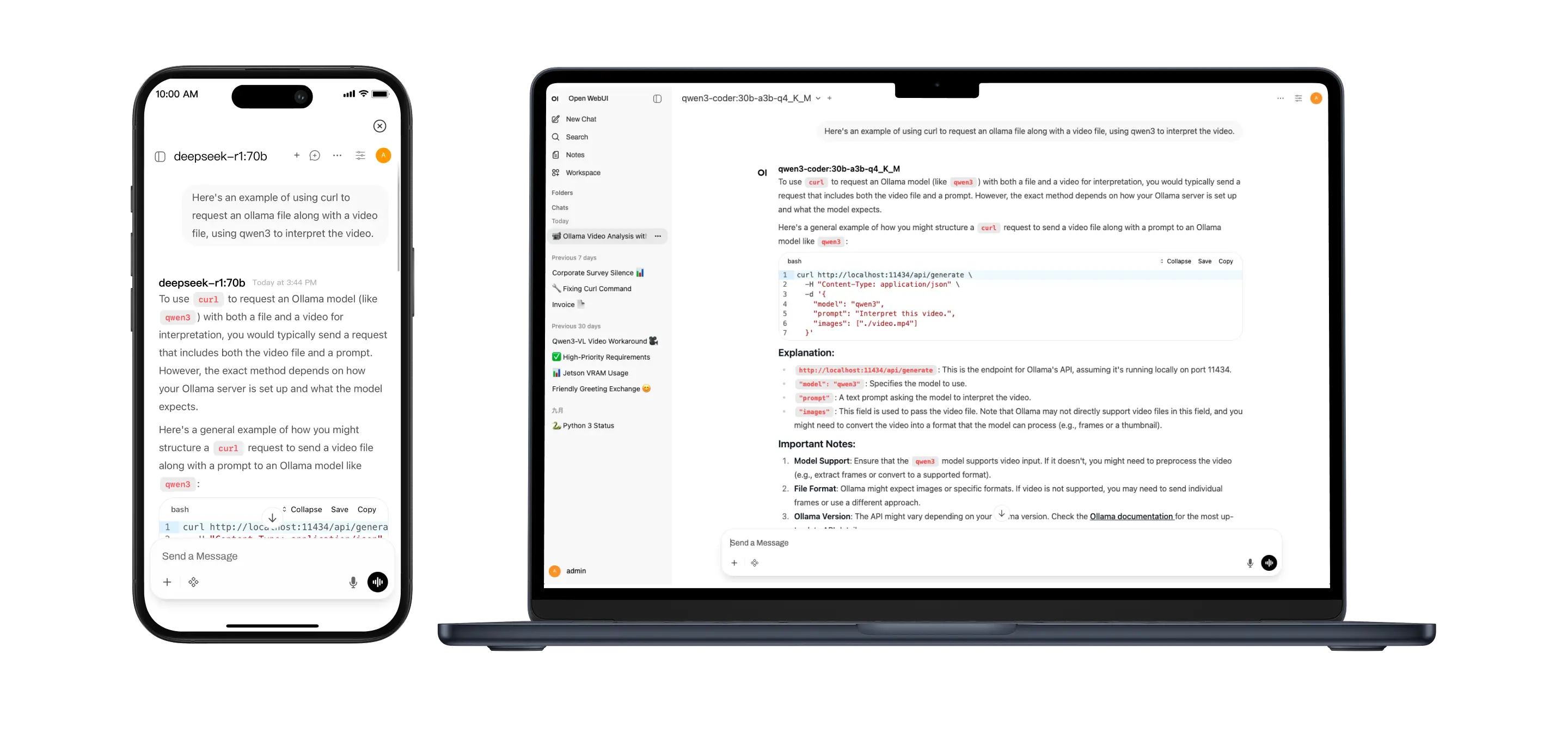

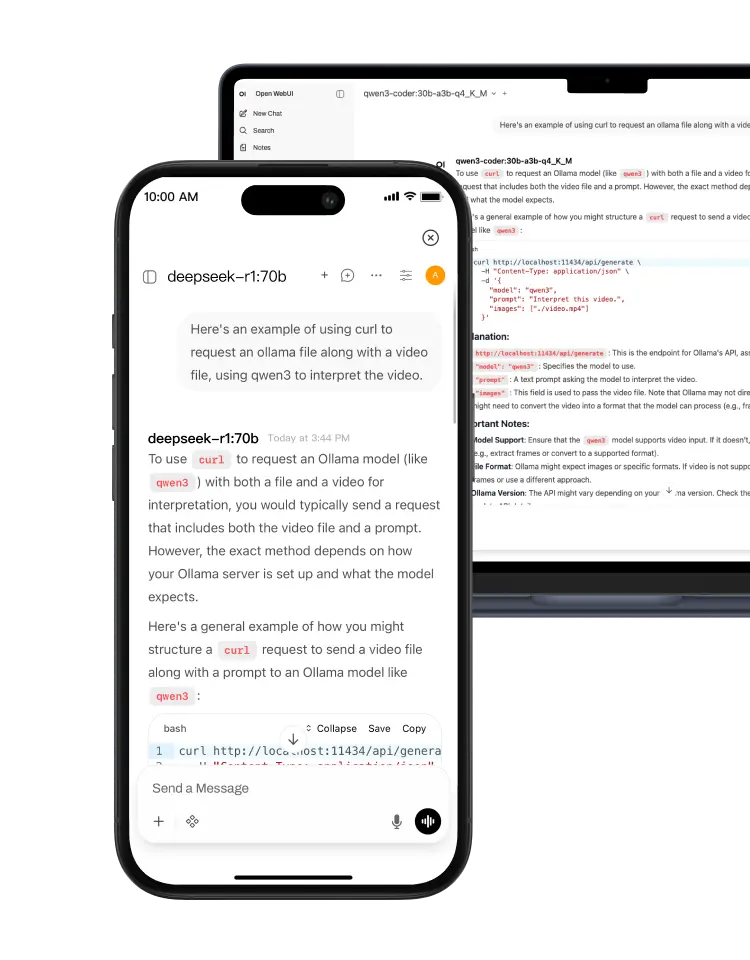

AI Writes Code, Unlimited Tokens

Qwen3-Coder:30B | 31.84 tokens/s | 1024K context
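To reproduce a tokens-per-second figure like this on your own unit, note that Ollama's final (non-streamed) response reports `eval_count` (tokens generated) and `eval_duration` (nanoseconds spent generating them), and Ollama's API documentation computes speed as their ratio. This is a minimal sketch, assuming you have already parsed that response into a dict; the helper names are ours, and the measured figure will vary with model, quantization, and prompt length.

```python
def tokens_per_second(count: int, duration_ns: int) -> float:
    """Throughput from a token count and a duration in nanoseconds."""
    if duration_ns <= 0:
        raise ValueError("duration must be positive")
    return count / duration_ns * 1e9


def ollama_speeds(response: dict) -> dict:
    """Prompt-processing and generation speeds from an Ollama final response.

    Uses Ollama's timing fields: prompt_eval_count / prompt_eval_duration for
    reading the prompt, eval_count / eval_duration for generating the output.
    """
    speeds = {"generation_tok_s": tokens_per_second(response["eval_count"], response["eval_duration"])}
    # Prompt timing may be missing, e.g. when the prompt was served from cache.
    if response.get("prompt_eval_duration"):
        speeds["prompt_tok_s"] = tokens_per_second(
            response.get("prompt_eval_count", 0), response["prompt_eval_duration"]
        )
    return speeds


if __name__ == "__main__":
    # Illustrative numbers only, not a measurement: 3,184 tokens in 100 seconds.
    sample = {"eval_count": 3184, "eval_duration": 100_000_000_000}
    print(ollama_speeds(sample))
```

Generation speed (the figure quoted above) and prompt-processing speed are reported separately because they are limited by different things; only the former is usually advertised.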

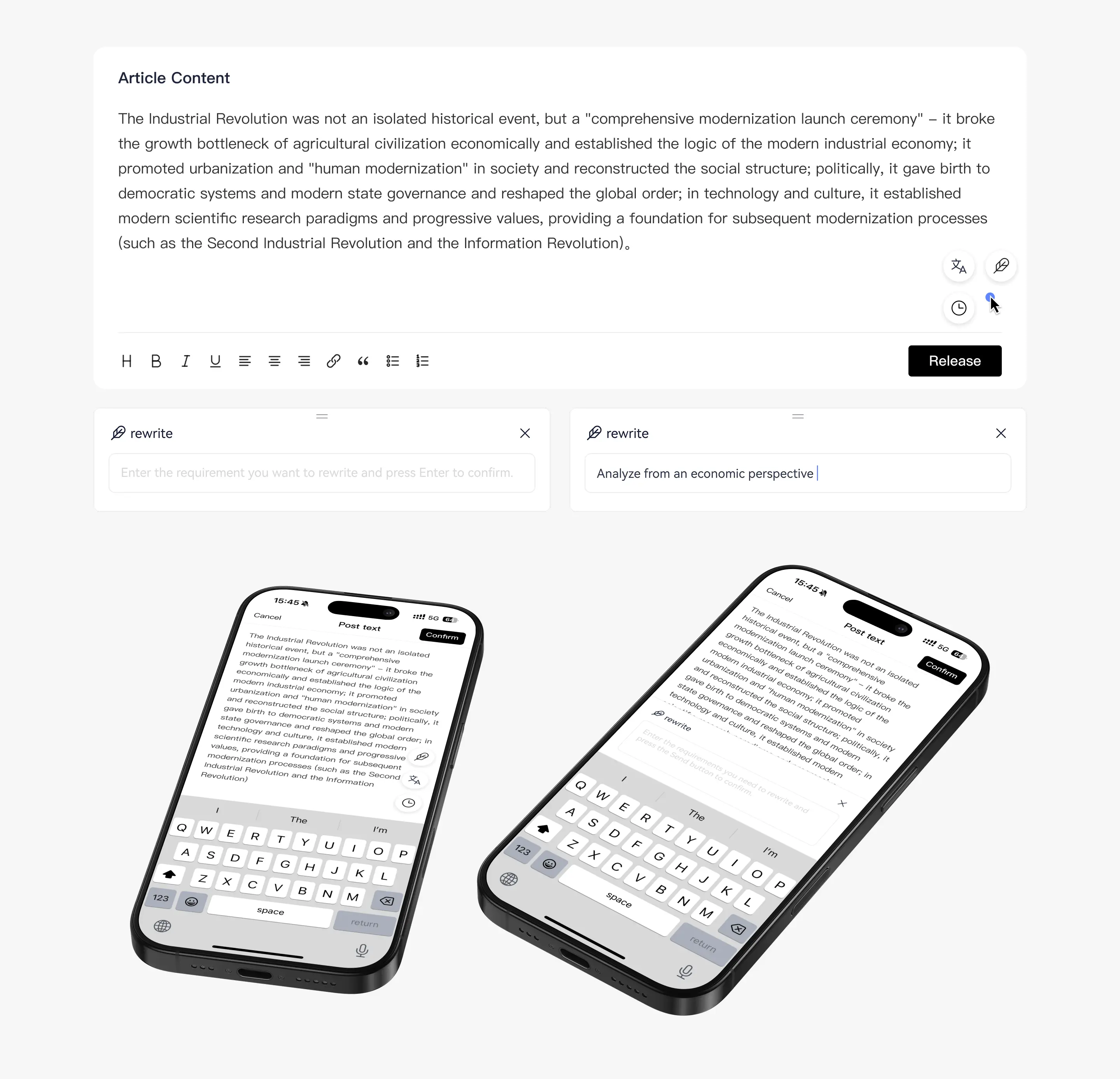

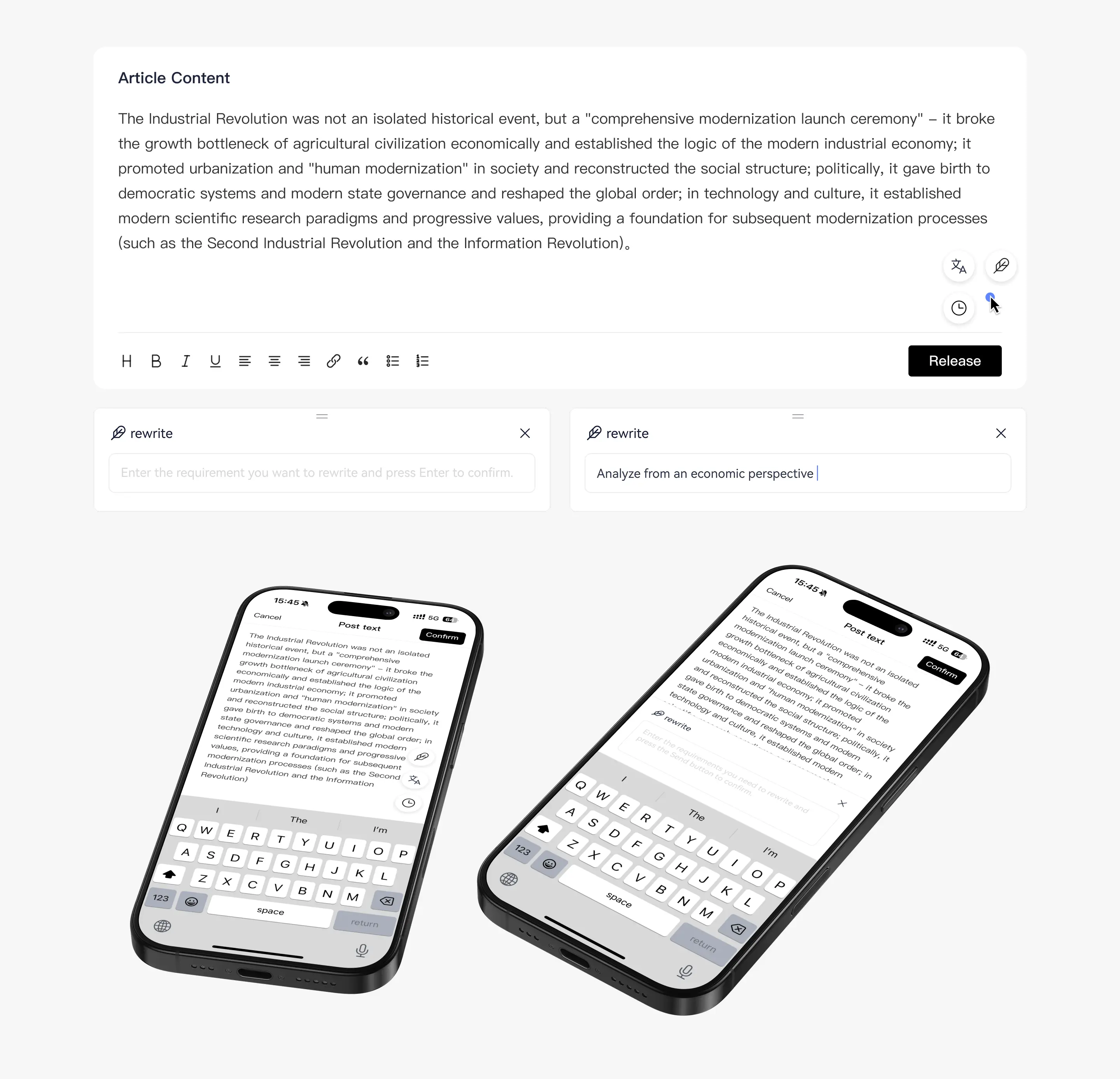

Web AI Summary

No AI subscription needed. Unlimited AI research, with support for web page summarization, rewriting, and translation

AI Input Box

In any web input box, type Chinese and press the space bar three times to have it automatically translated into English, Spanish, Russian, and more. A great helper for cross-border e-commerce.

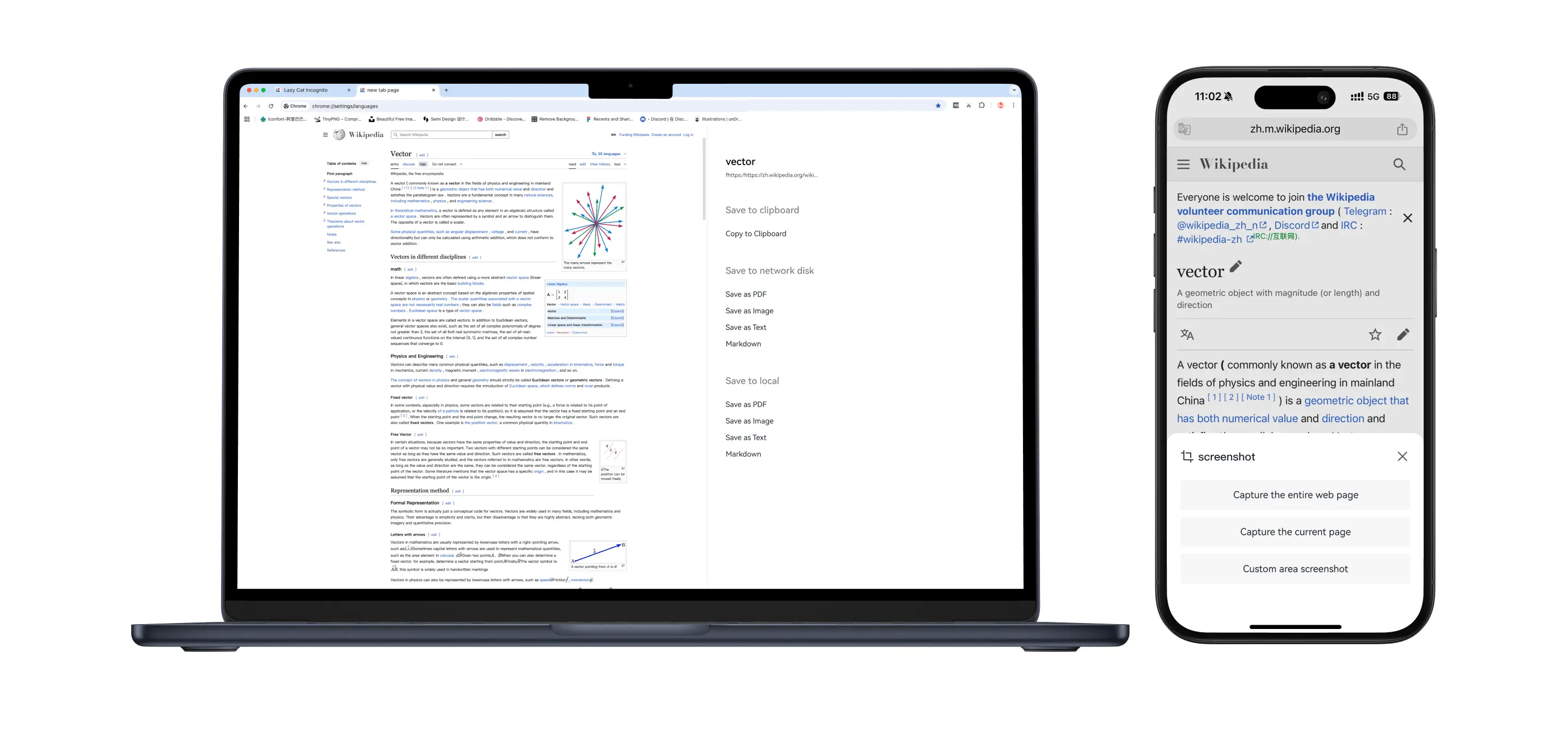

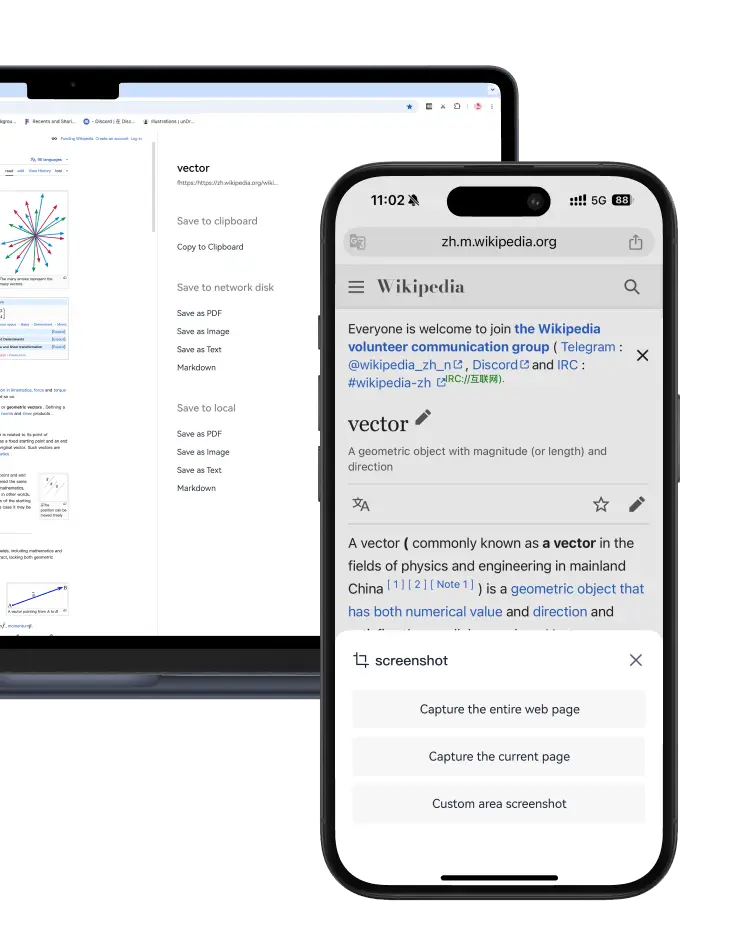

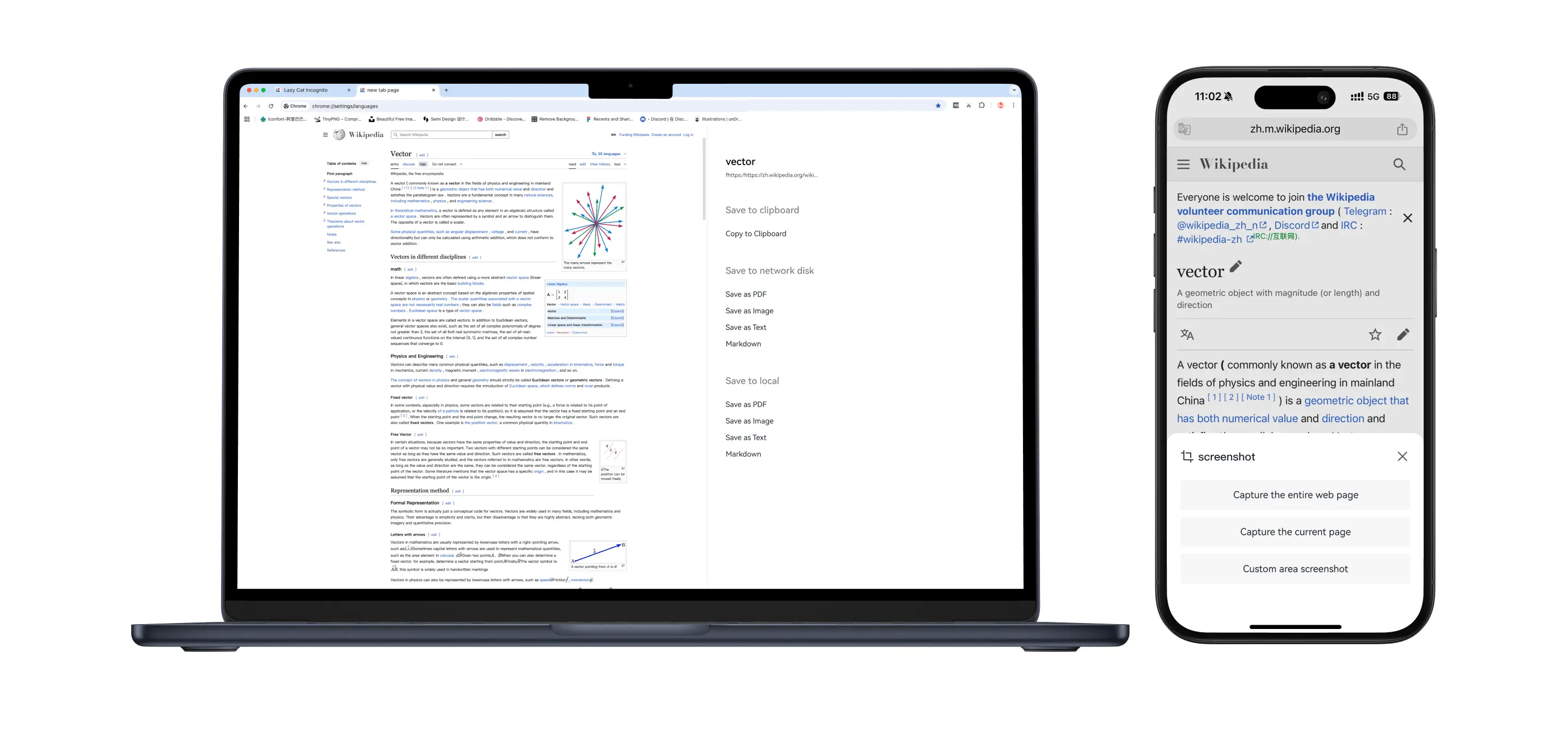

Web AI Screenshot

Screenshot any webpage to extract its text, easily getting around websites' anti-copy restrictions

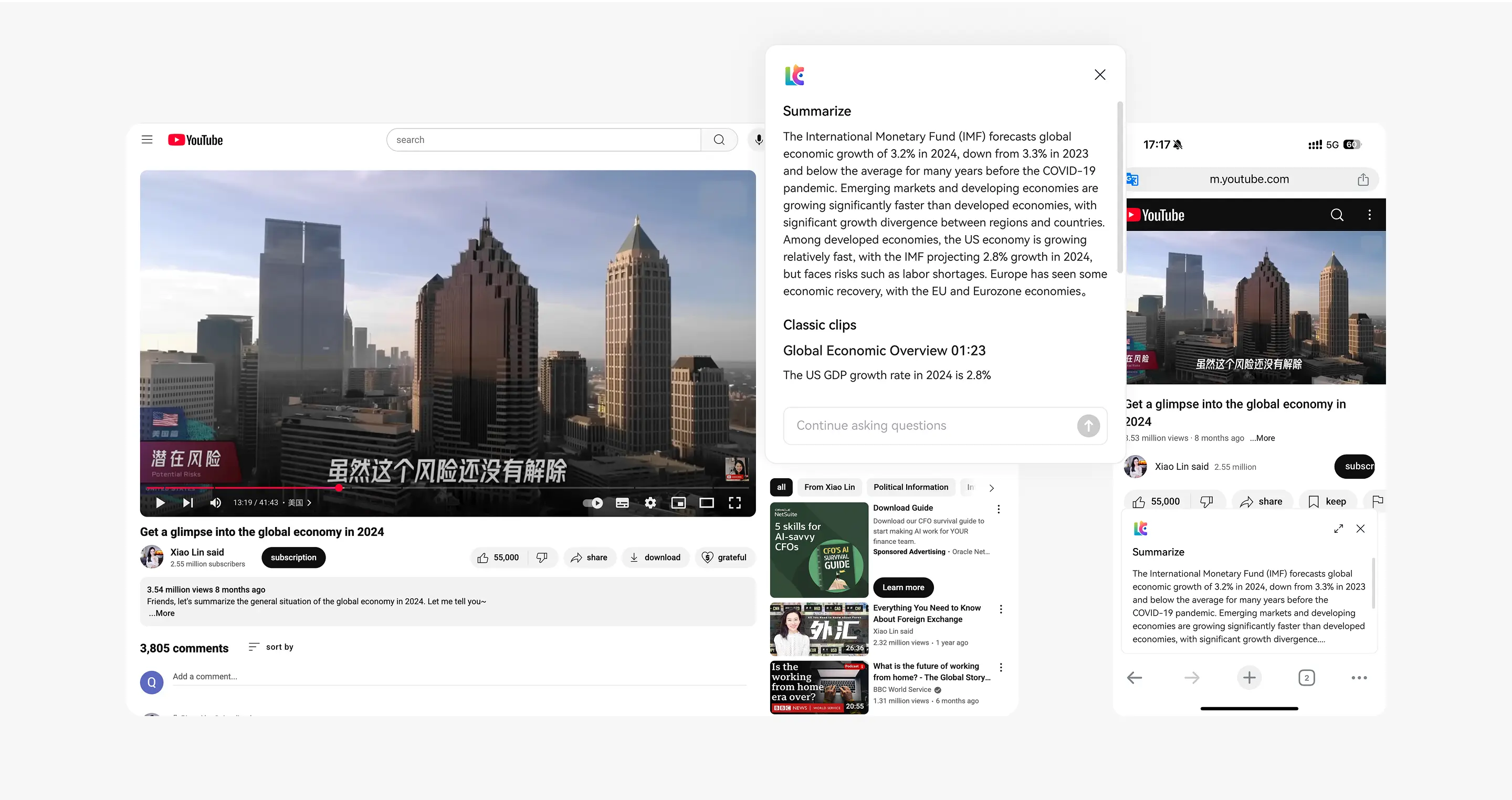

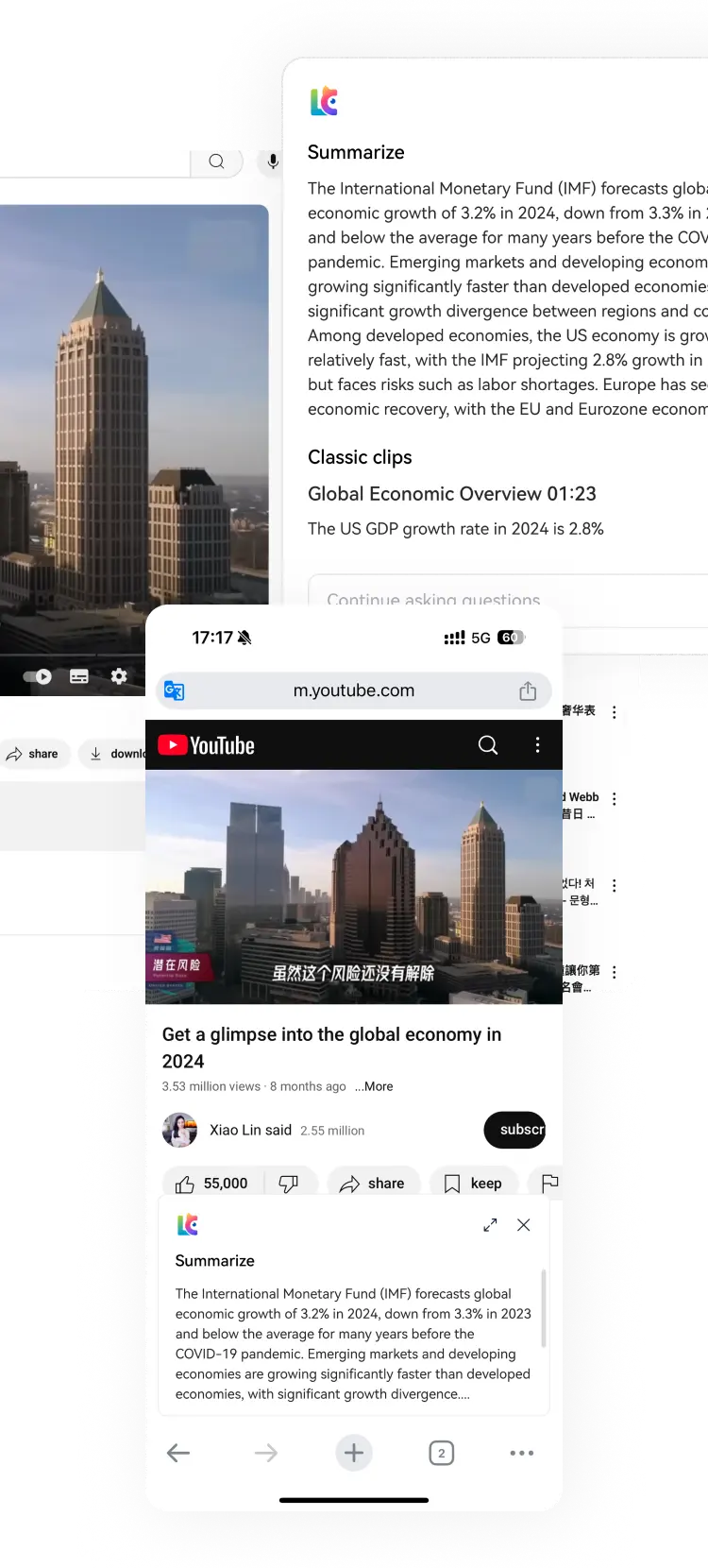

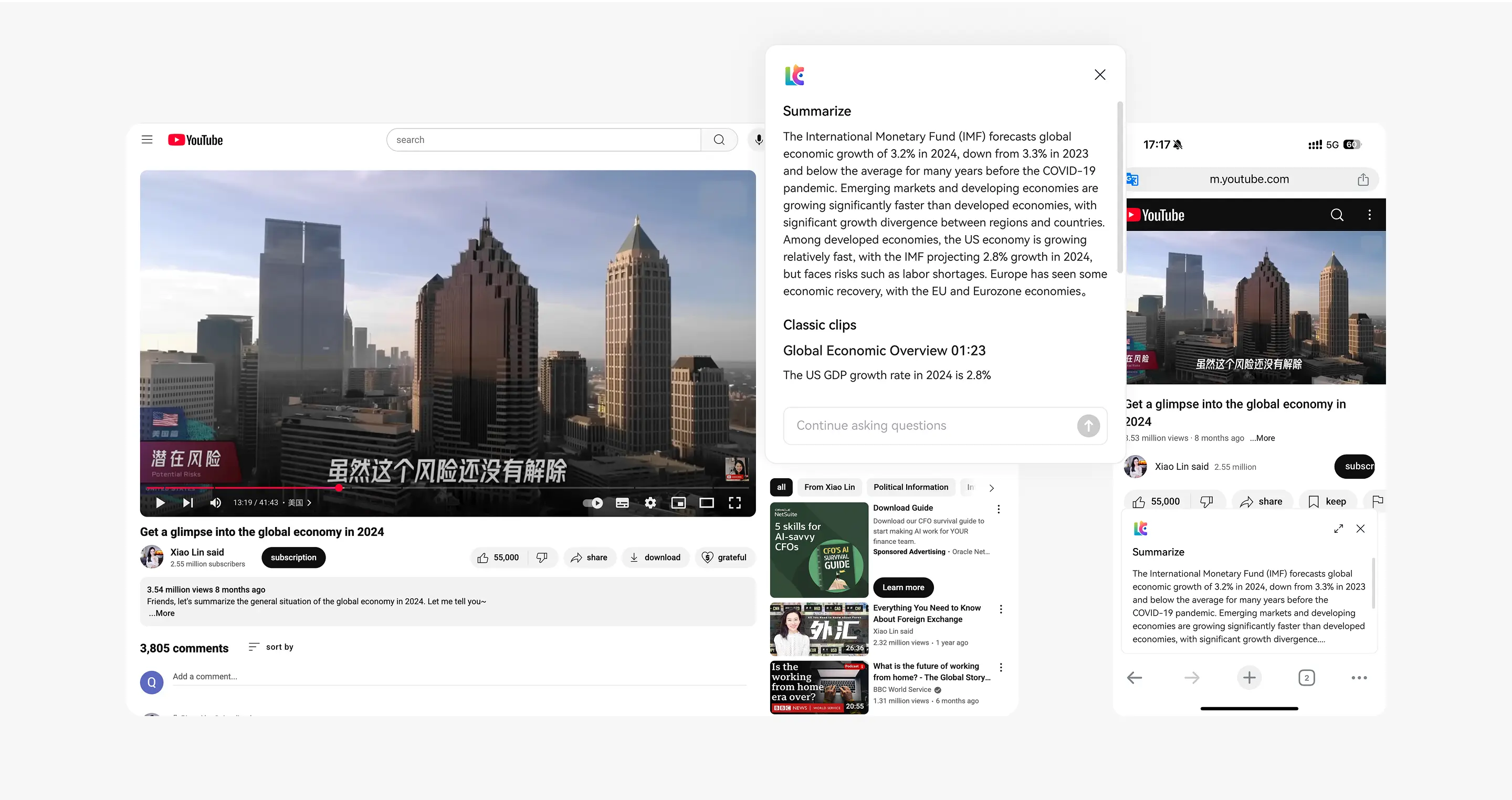

Video AI Summary

AI analyzes video content and delivers results in 10 seconds. Review the summary before watching the video and learn 100 times faster.

Webpage AI Reading

Turn any webpage into a podcast; learn while driving or on a business trip

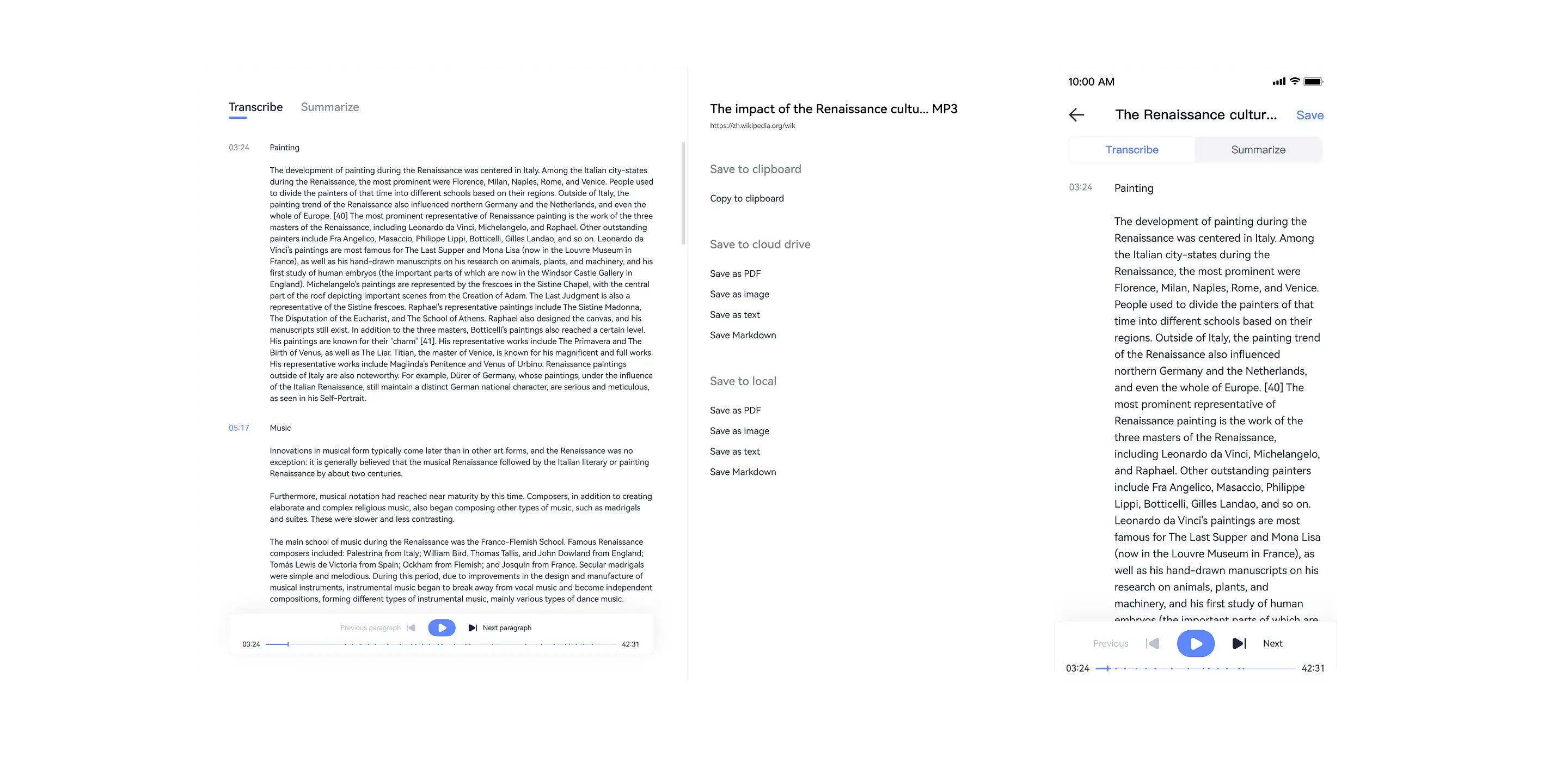

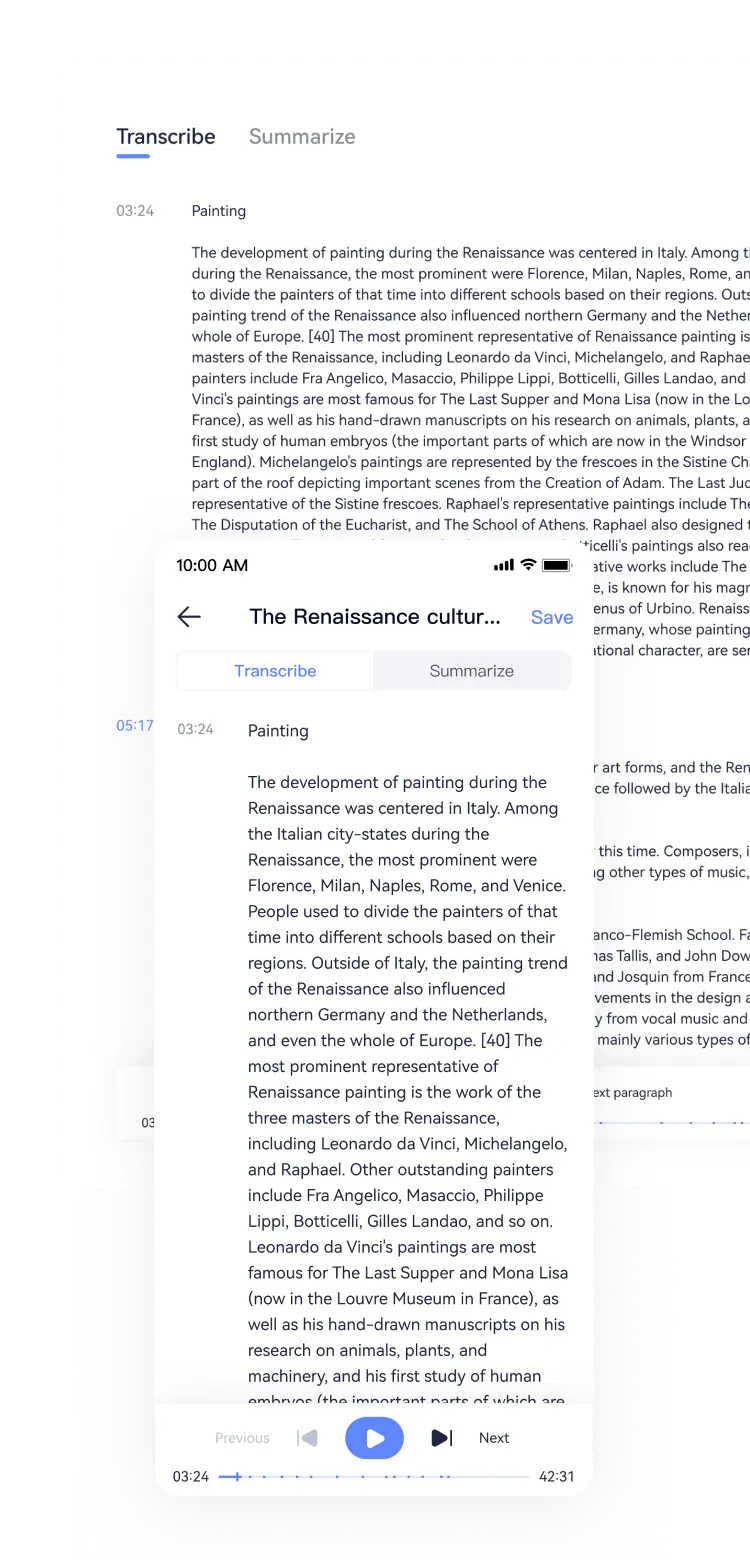

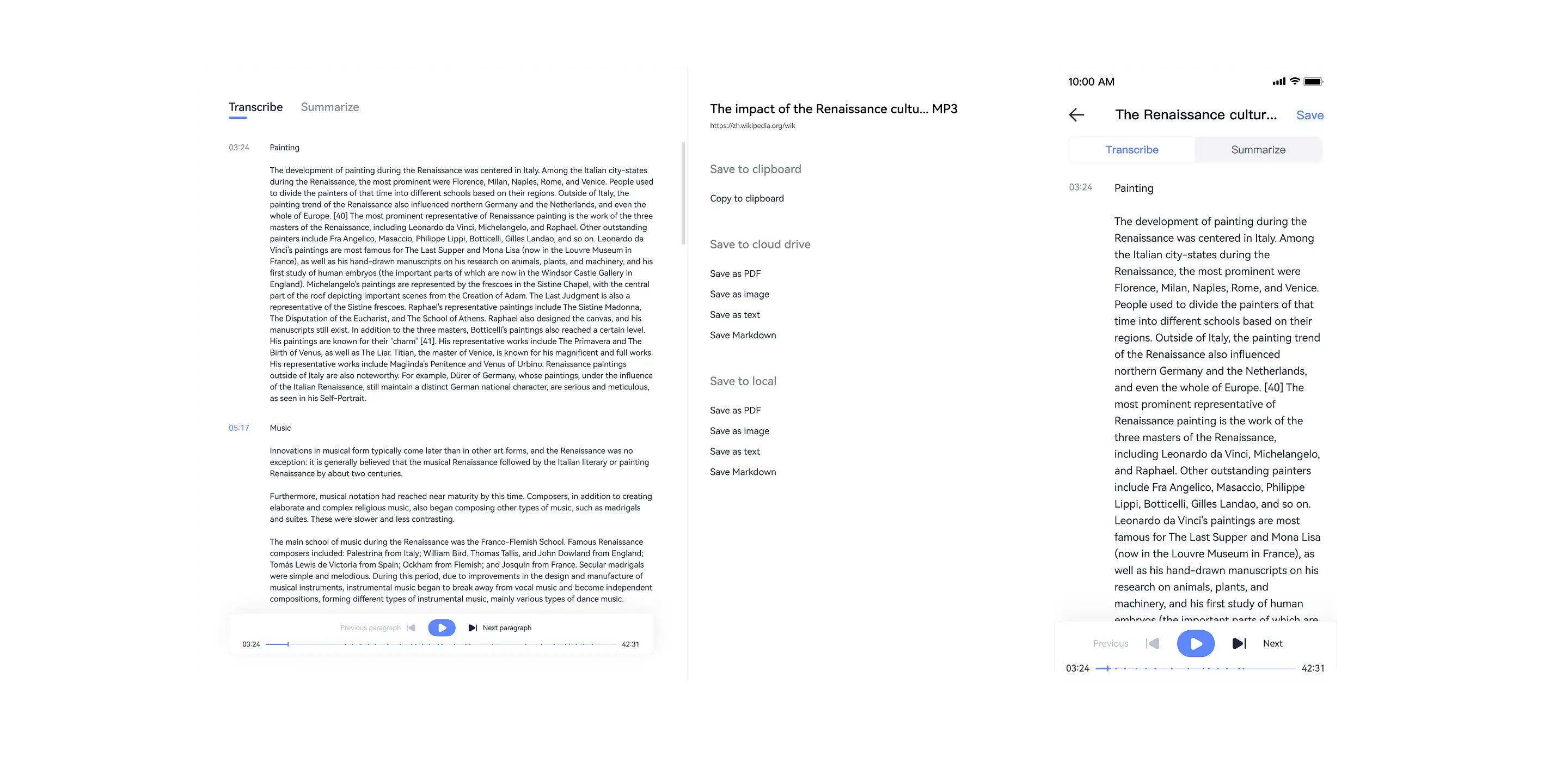

AI Audio-to-Text

Quickly transcribe and summarize audio content from platforms such as Xiaoyuzhou and Himalaya FM. Learn 2 hours of audio in 5 minutes.

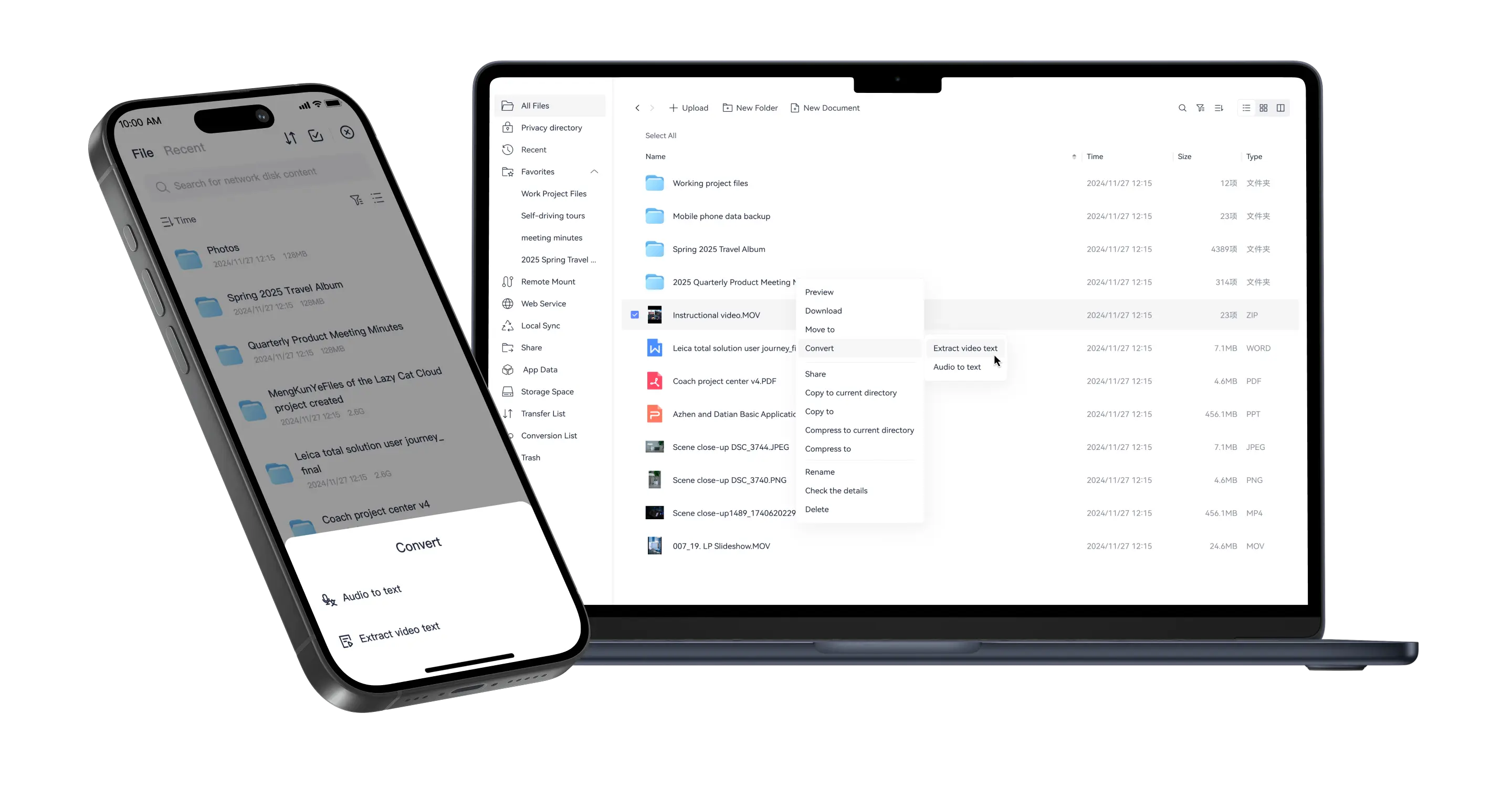

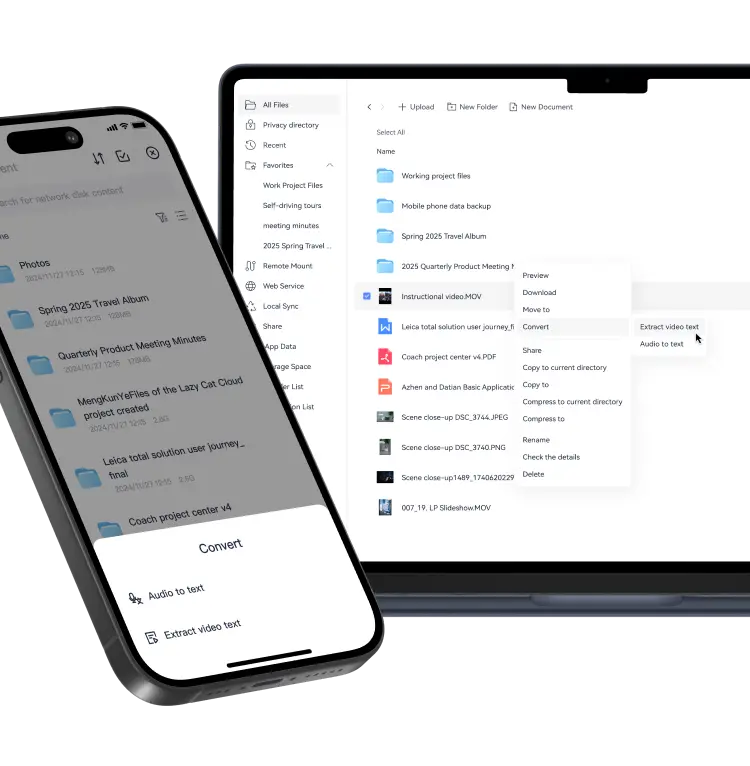

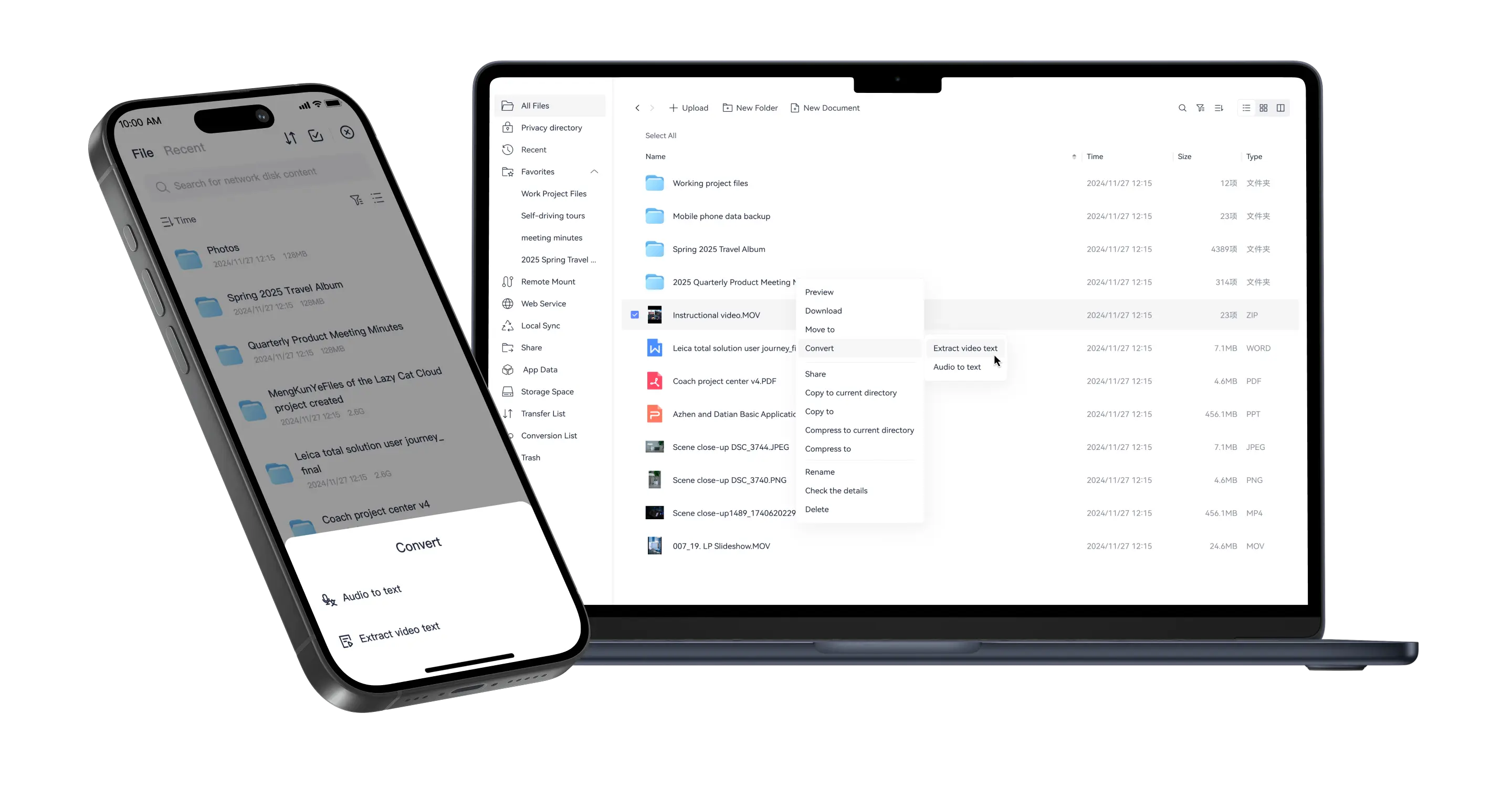

AI Video/Audio-to-Text

Quickly extract text from instructional videos/audio to accelerate lesson plan creation

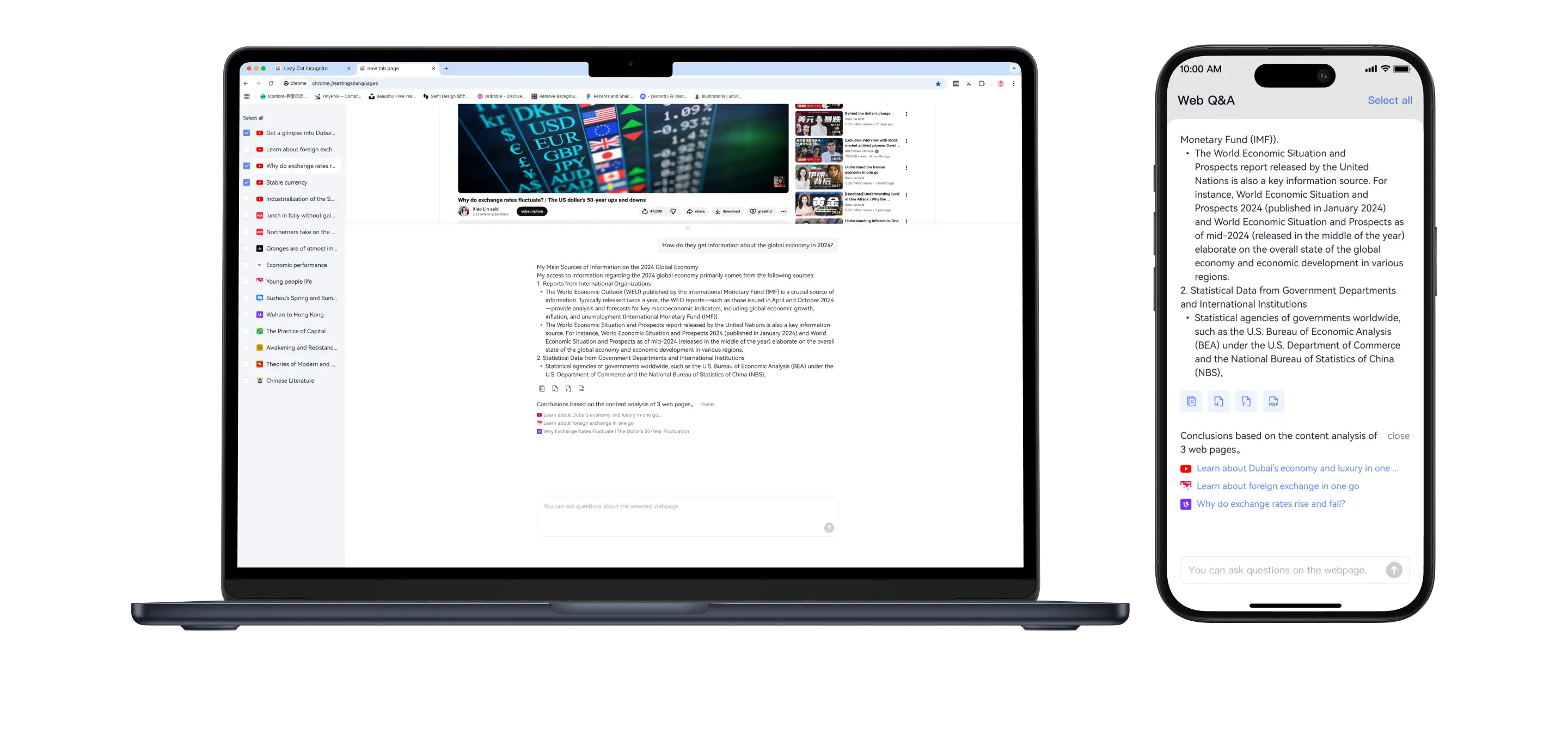

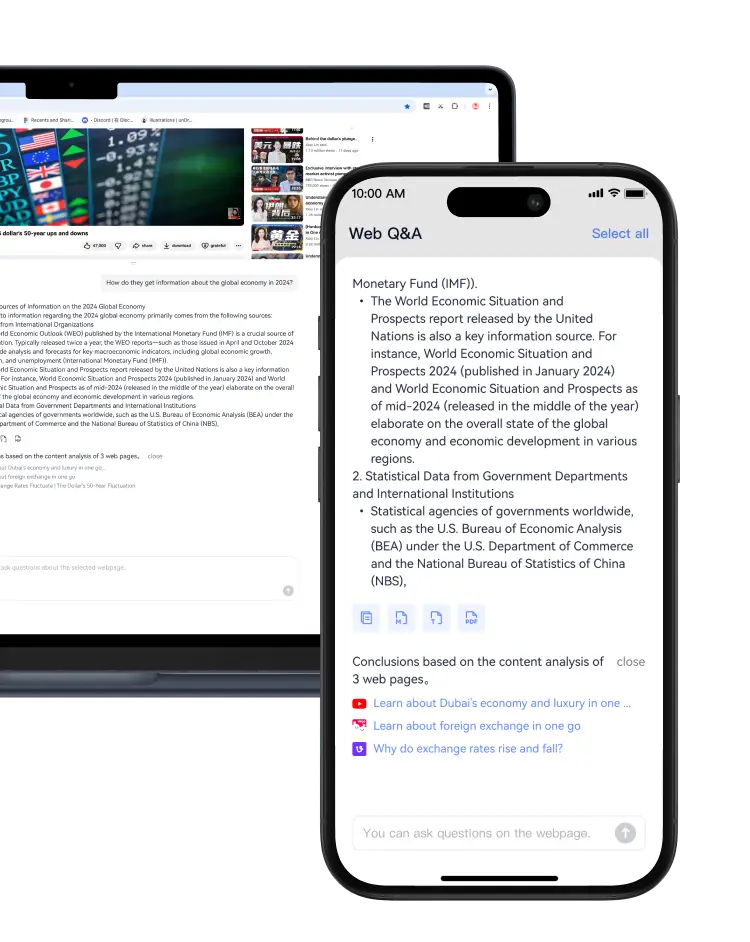

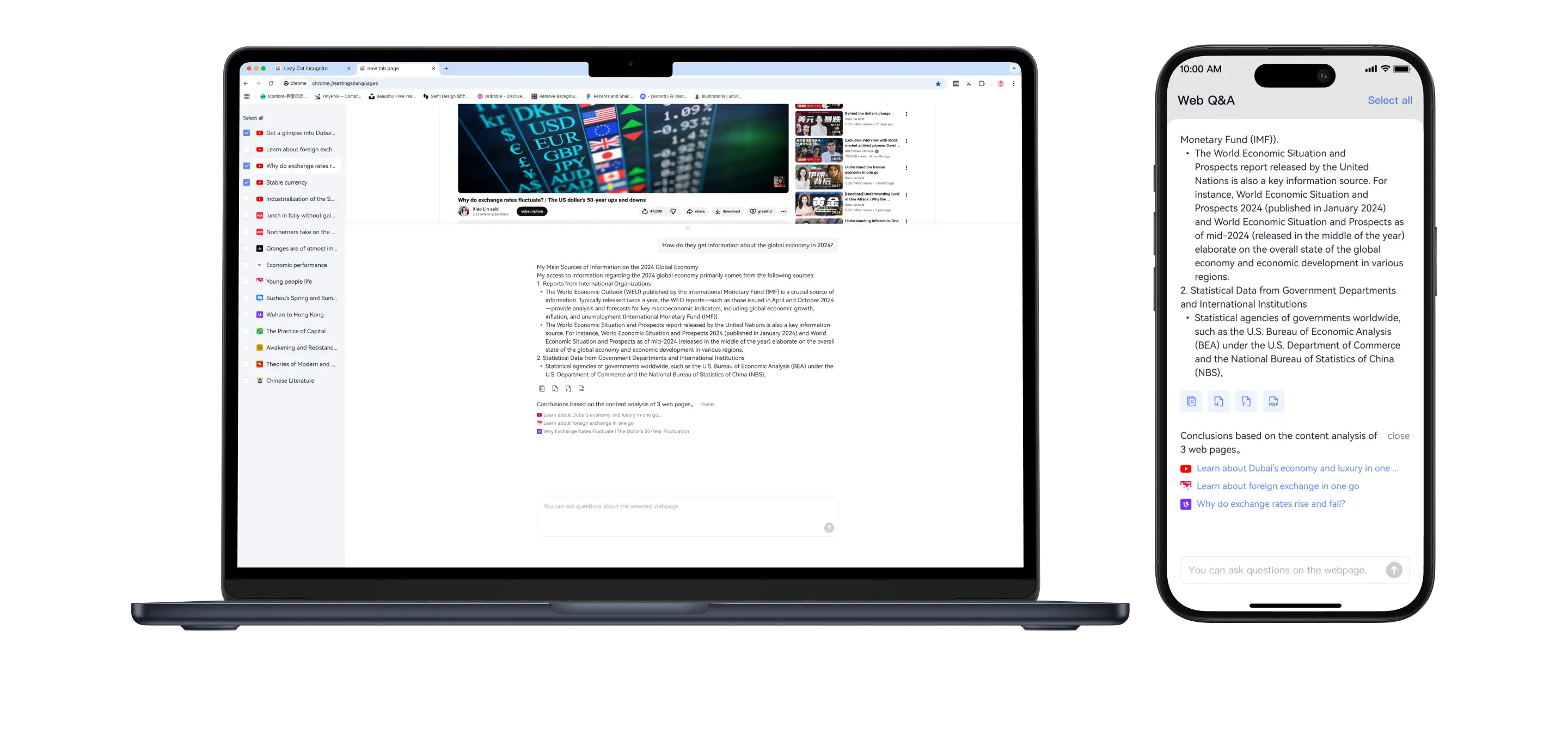

Web Tags AI Q&A

AI automatically analyzes the content of web page tags, saving time on manual data analysis

Image AI Translation

Easily translate foreign-language text in images, a great helper for online shopping

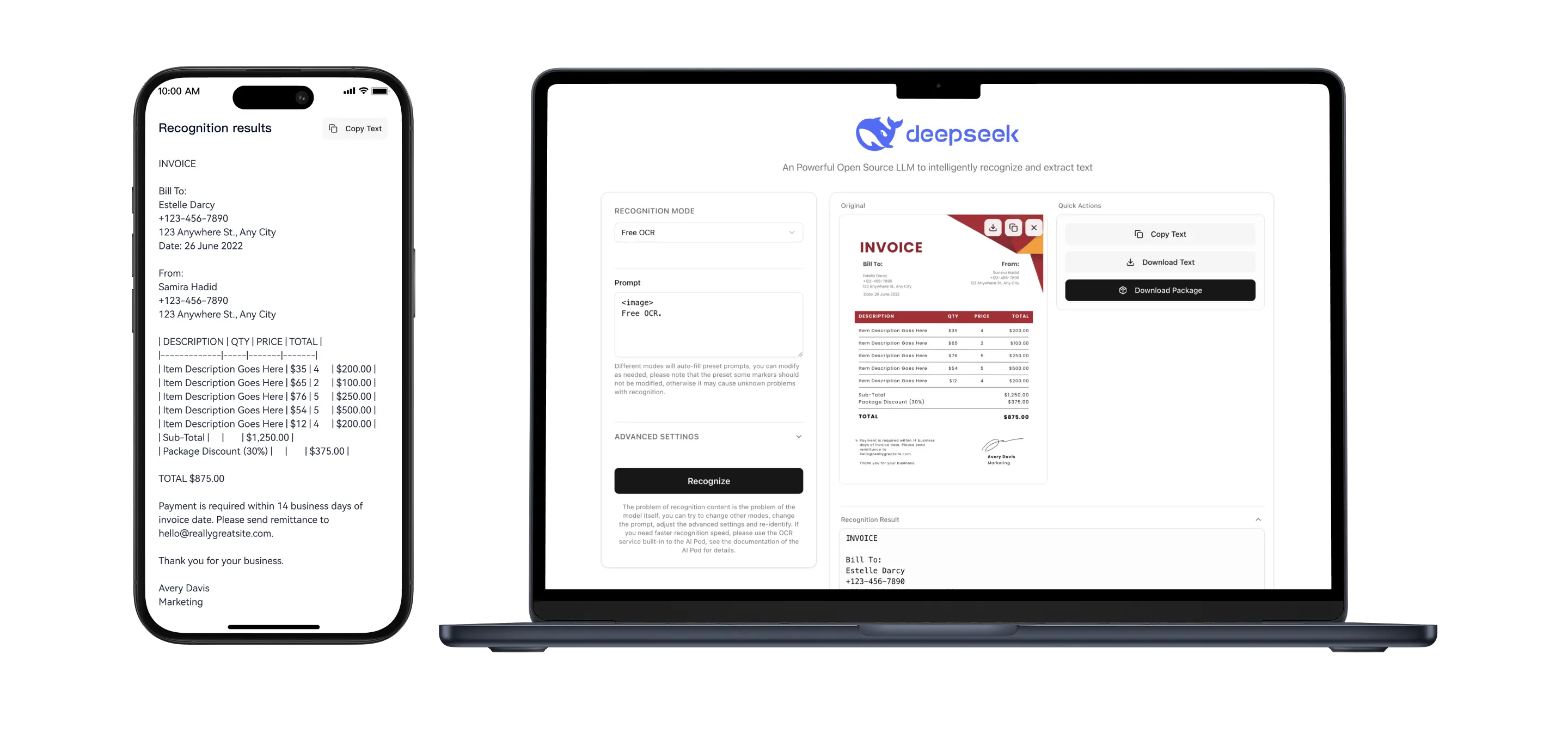

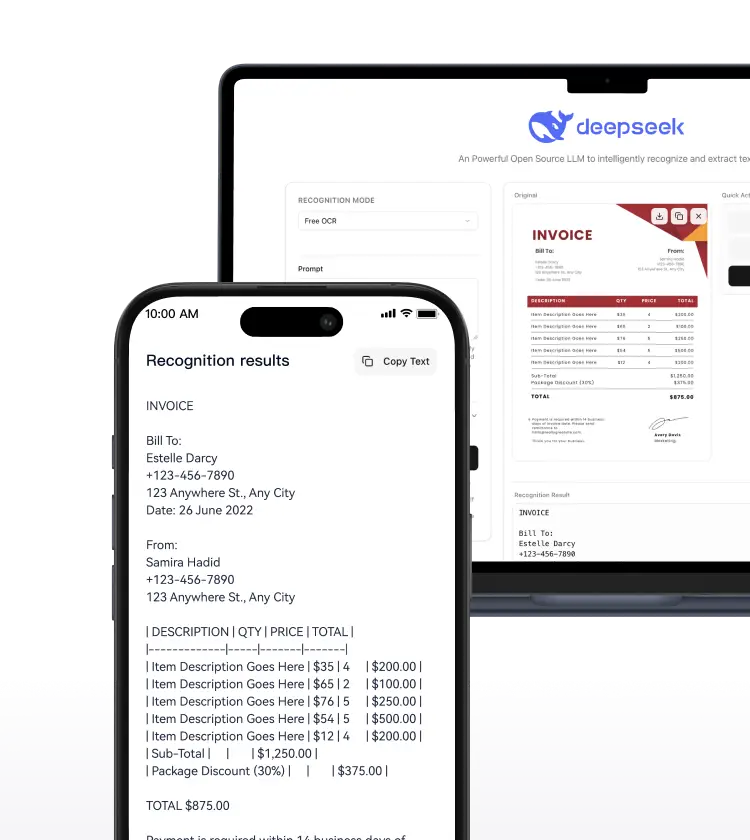

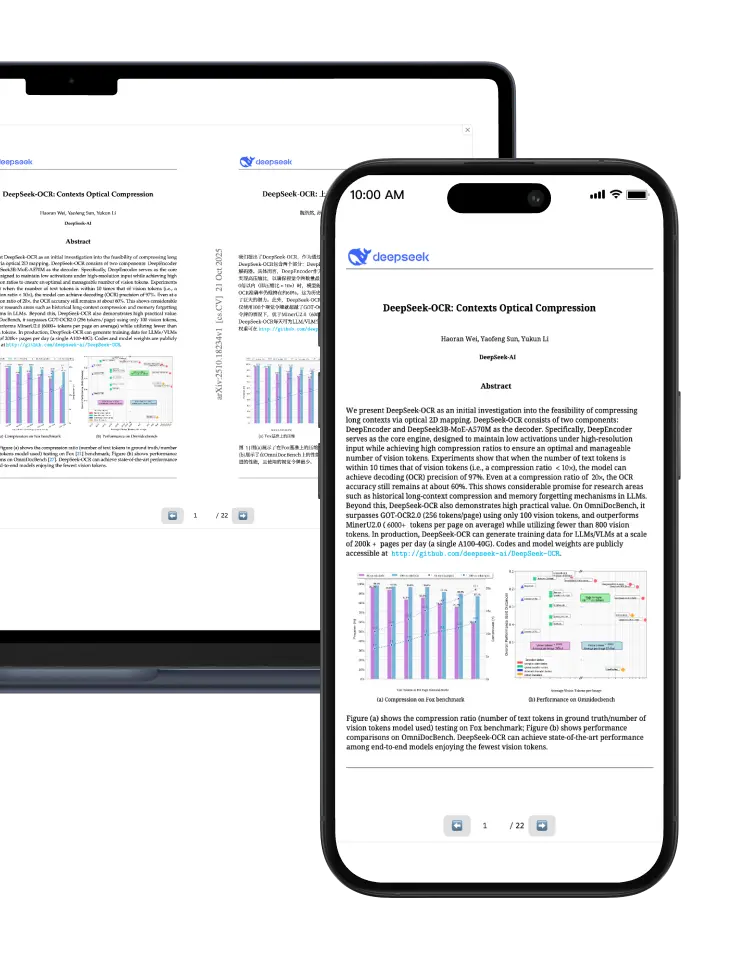

DeepSeek OCR

Intelligently recognizes and extracts text, supporting complex documents such as invoices and train tickets

Document AI Translation

Multilingual text translation; supports uploading text documents for translation

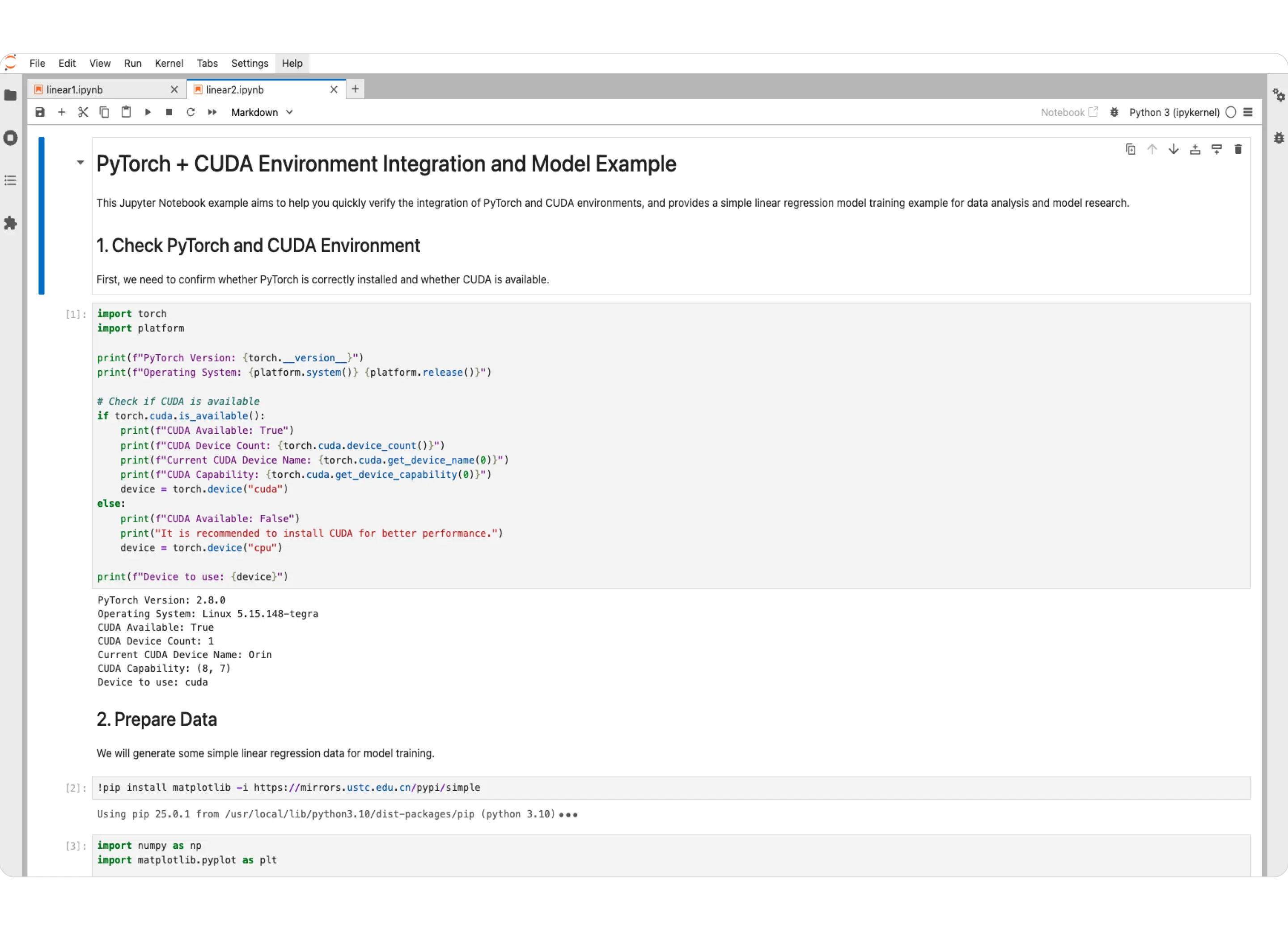

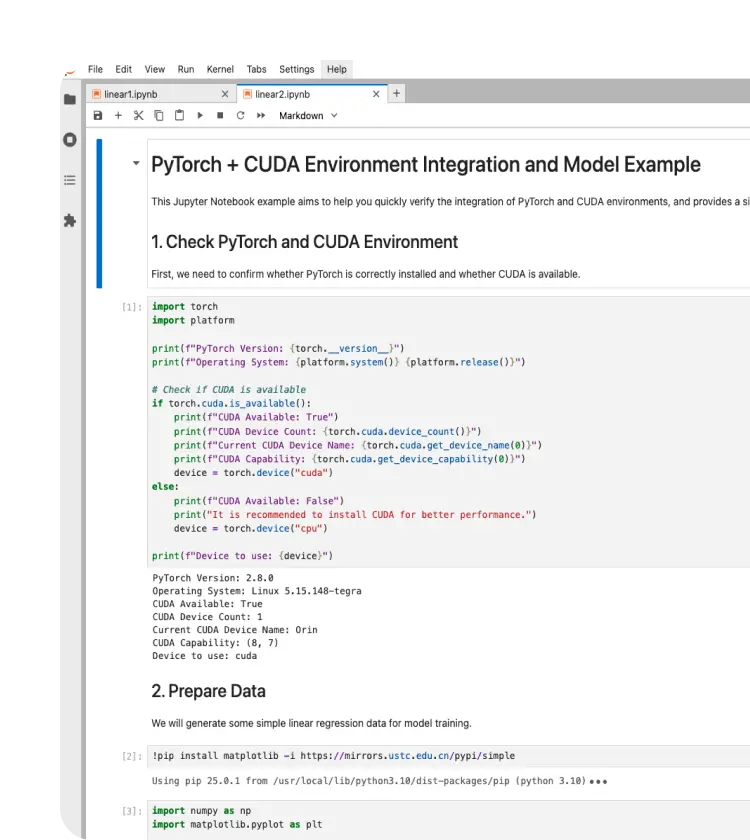

Jupyter Notebook

One-click installation of an optimized Jupyter with CUDA support, a great helper for AI research

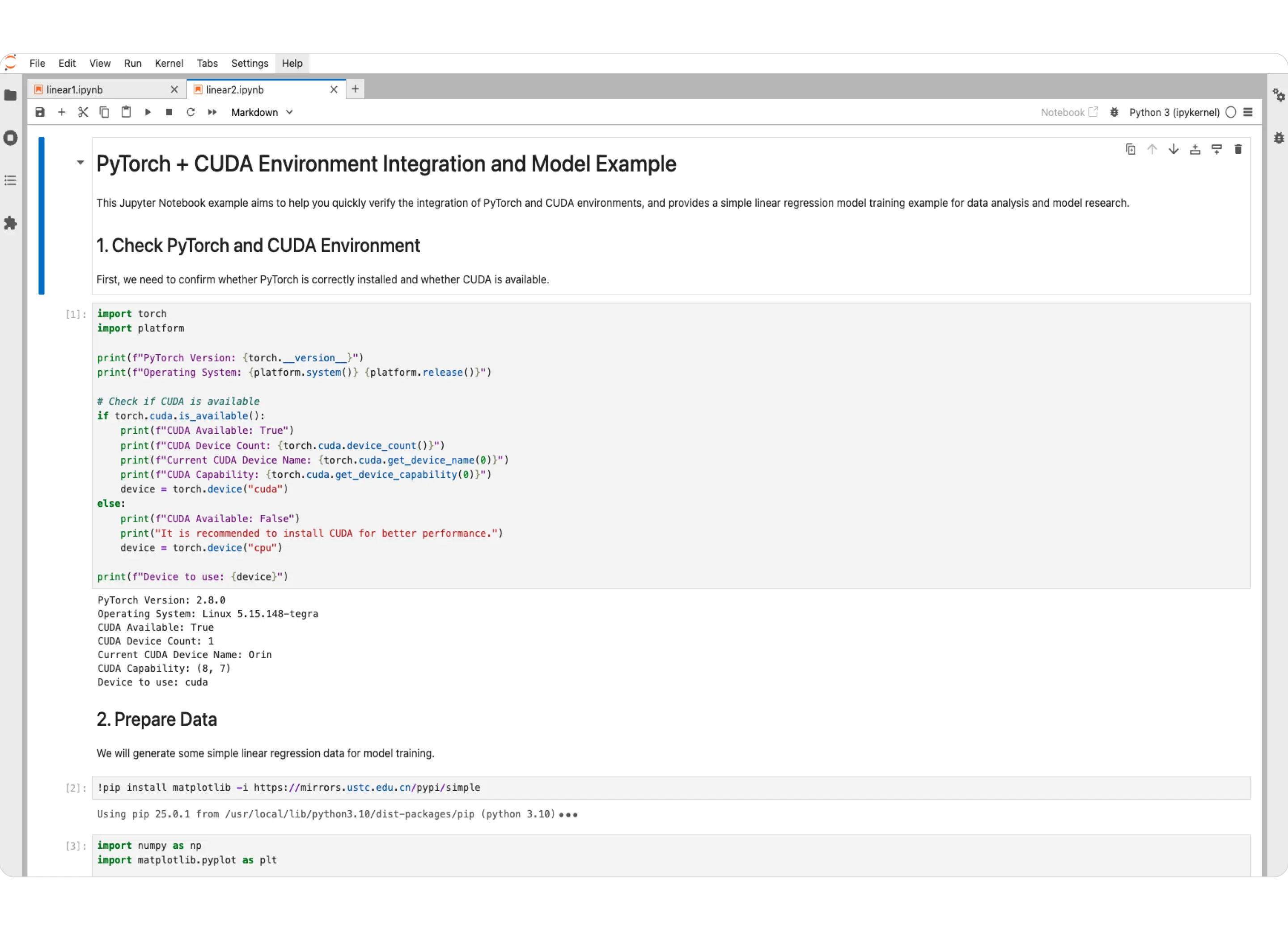

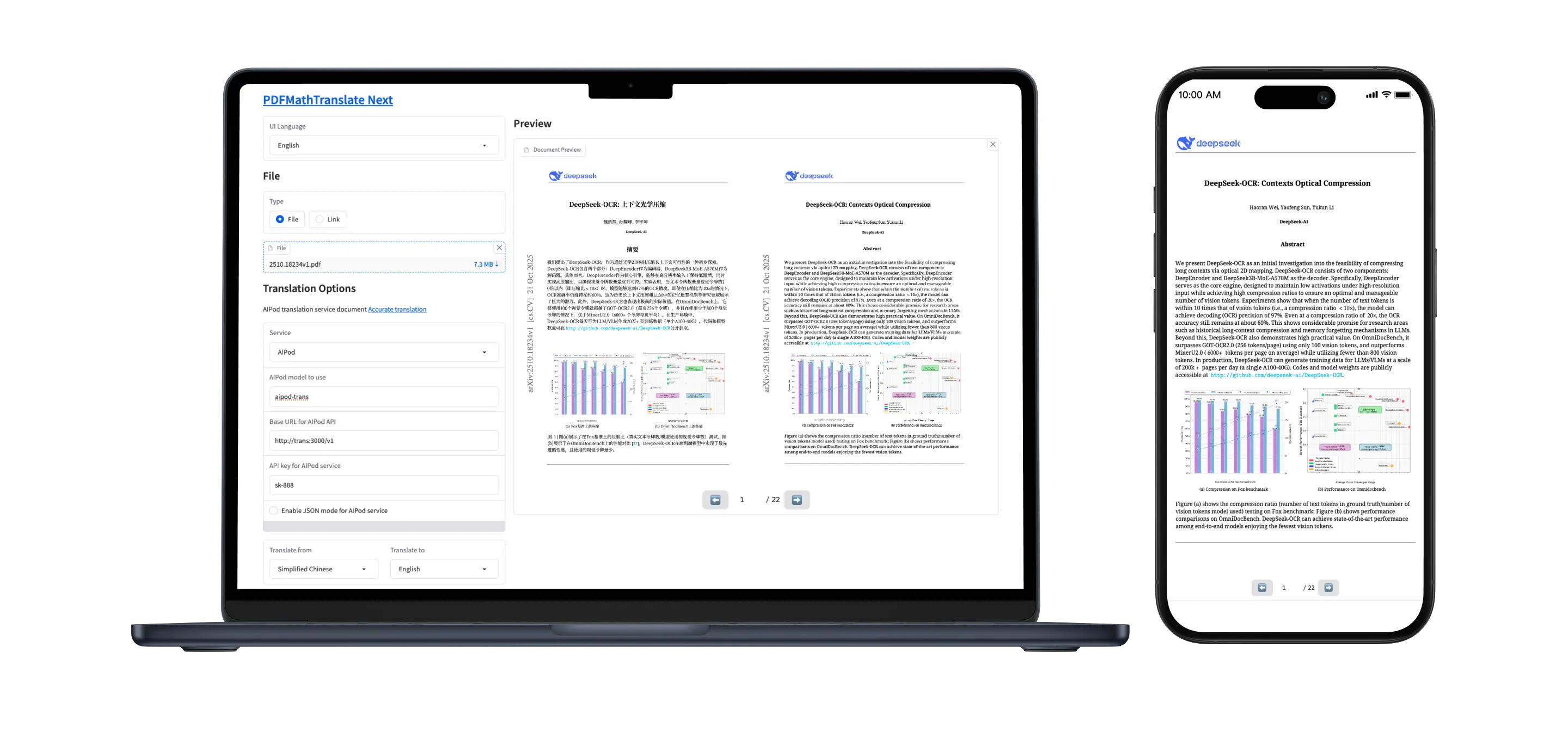

PDF/Document AI Translation

Upload and translate PDFs and various document formats, supporting multilingual translation

Ollama

Supports Ollama model downloads and mobile supercomputing, with support for 70B ~ 671B large AI models
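Because Ollama exposes a local REST API, other programs on the Pod can use the models it serves. Below is a minimal standard-library sketch against Ollama's `/api/generate` endpoint on its default port 11434. The model tag is only an example (use any model you have pulled). Calling the Pod from another machine assumes Ollama has been configured to listen on the LAN (for example via the `OLLAMA_HOST` environment variable), since by default it listens only on localhost; how the LCMD software configures this is not stated here.

```python
import json
import urllib.request

# Ollama's default address; replace with the Pod's LAN address if calling remotely.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 600) -> str:
    """Send the prompt and return the generated text from the 'response' field."""
    req = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]


if __name__ == "__main__":
    try:
        # Example model tag; use one you have pulled with `ollama pull`.
        print(generate("qwen3-coder:30b", "Write a haiku about CUDA."))
    except OSError as exc:  # URLError subclasses OSError; raised if nothing is listening
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

Setting `"stream": False` returns a single JSON object instead of a stream of partial chunks, which keeps the client short at the cost of waiting for the full answer.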

ComfyUI

Advanced text-to-image tool supporting Qwen-Image, Flux, and Stable Diffusion

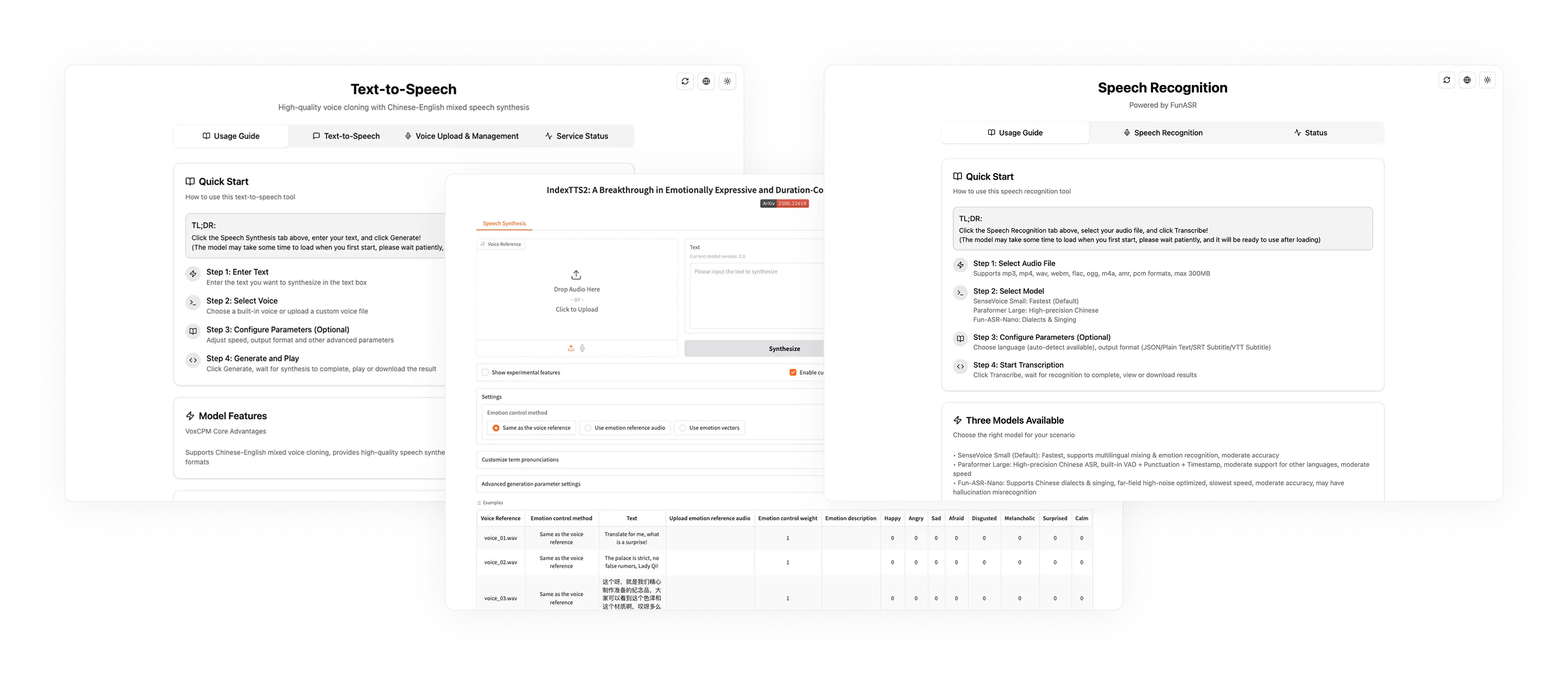

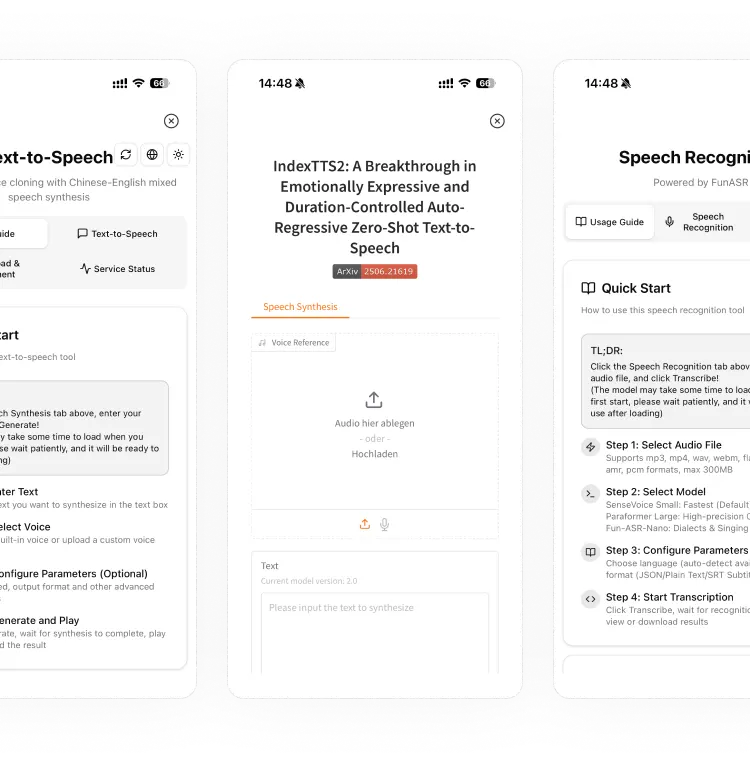

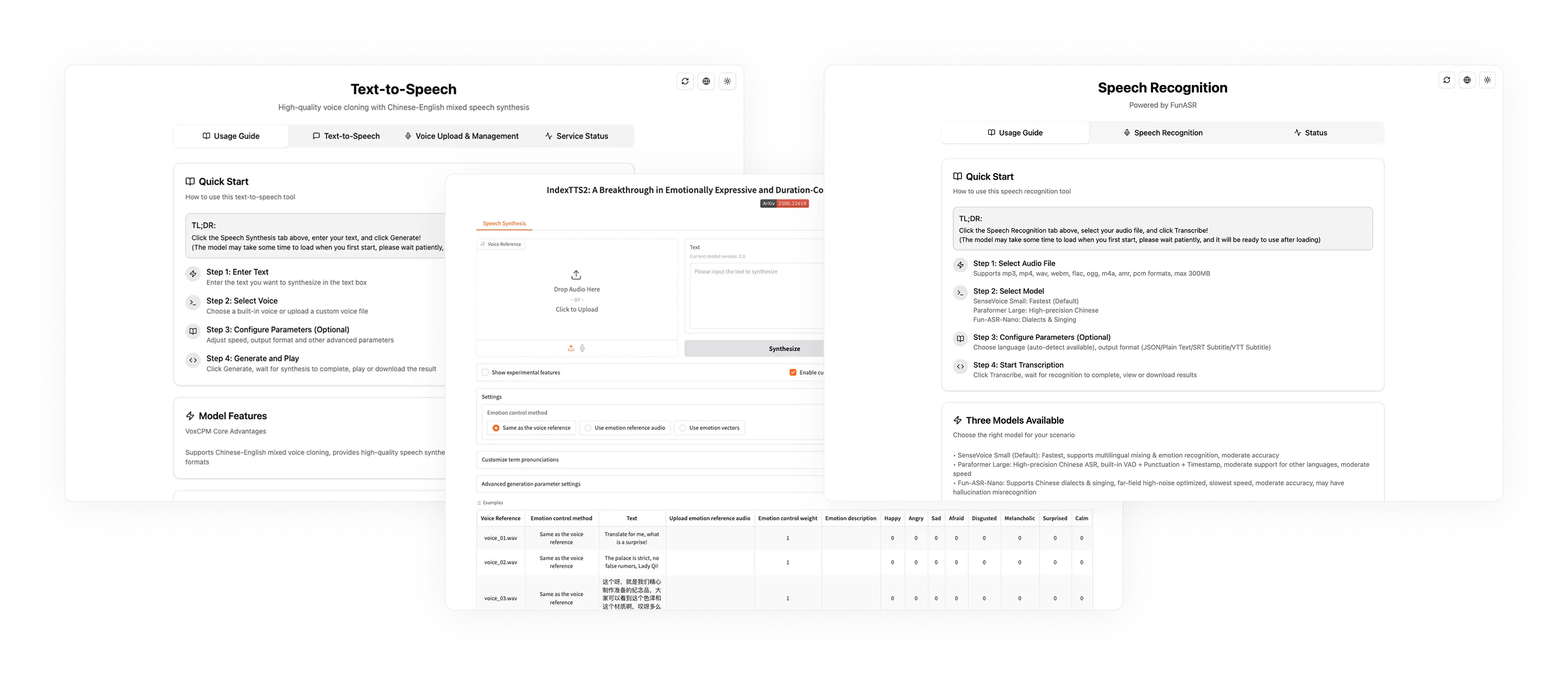

AI Speech Recognition and Synthesis

Integrates speech recognition, speech synthesis, and speech cloning, supporting AI models such as VoxCPM, IndexTTS, and FunASR

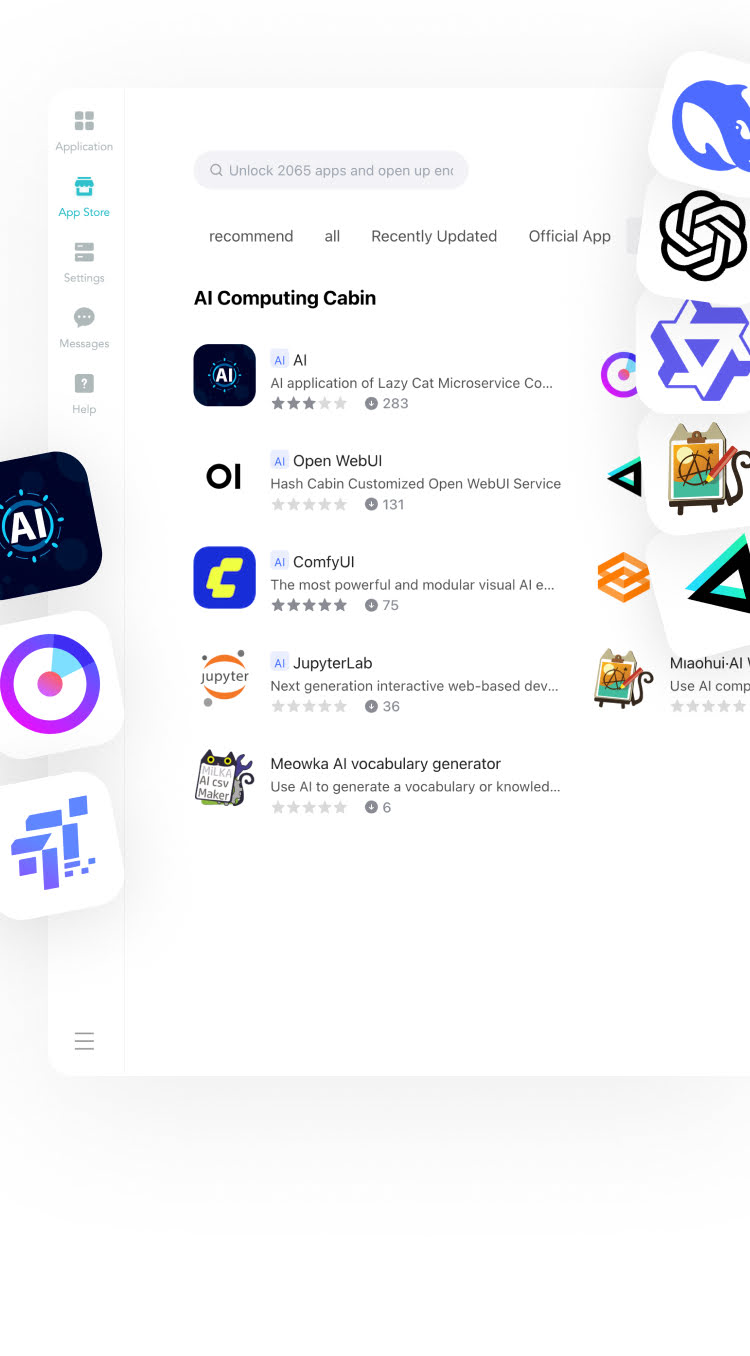

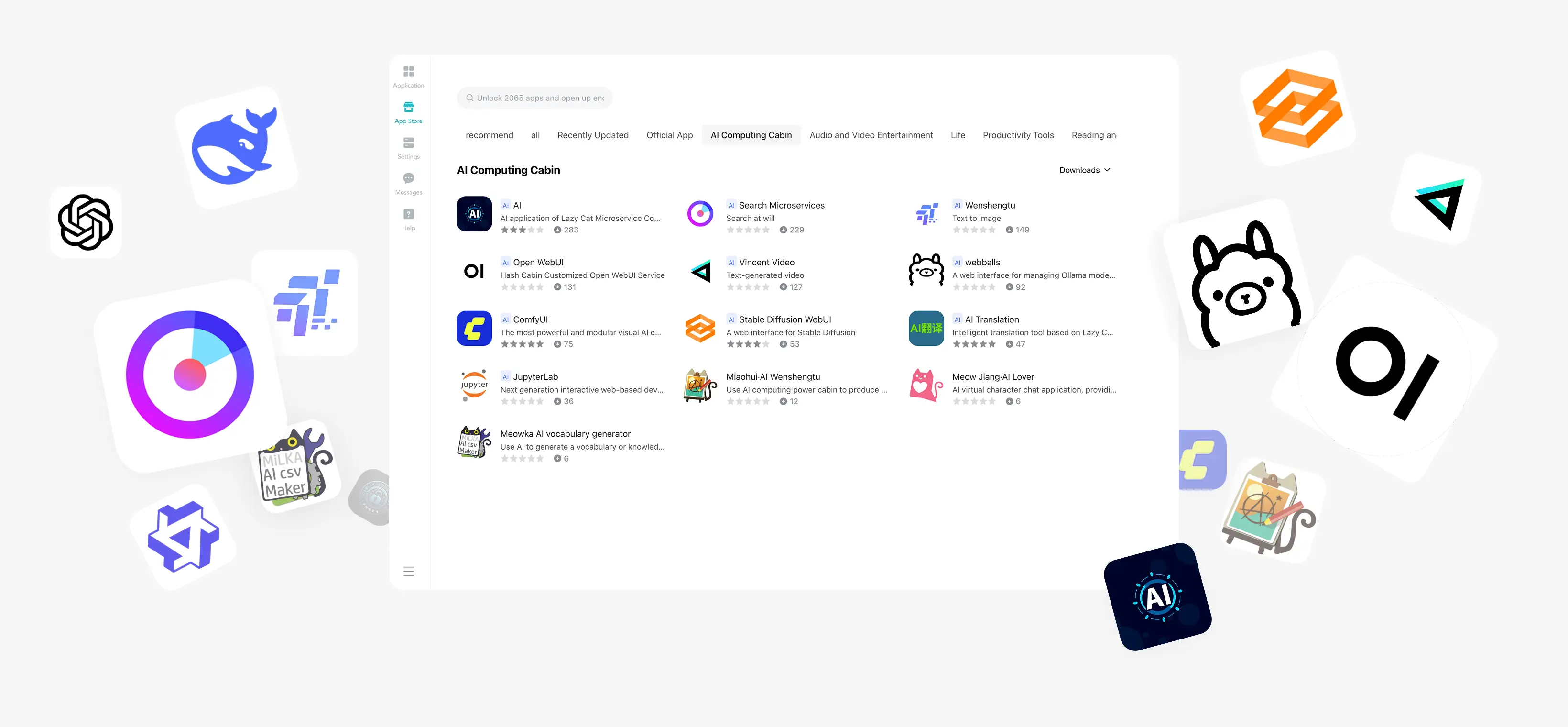

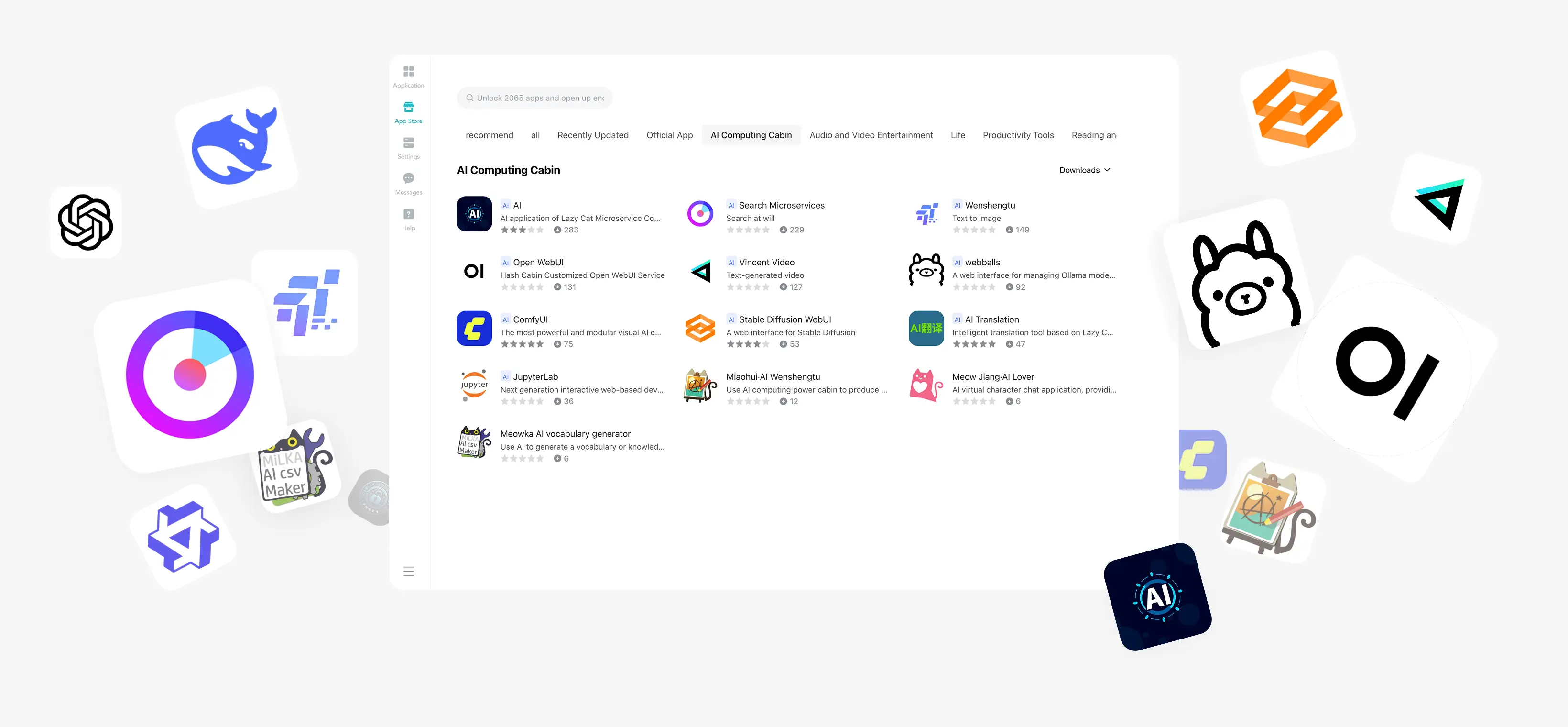

LCMD AI Pod App Store

Local deployment of AI applications, unlimited Tokens, privacy and security

Frequently Asked Questions

What are the differences between the LCMD AI Pod and a desktop GPU solution?

① The AI Pod has 64GB VRAM, while consumer-grade graphics cards top out at 32GB. 64GB VRAM can run models as large as 70B ~ 671B.

② The AI Pod can be used as a standalone computer, at only 1/3 the price of a desktop computer with equivalent GPU capability.

③ Multiple AI Pods can be networked over LAN, so computing power can scale without limit, whereas a traditional machine can hold at most four GPUs.

④ The AI Pod is more power-efficient, consuming only 60W, while consumer-grade graphics cards consume 350W to 450W.

⑤ The AI Pod focuses on AI inference and learning and is quieter, but unlike consumer-grade graphics cards it cannot handle gaming.

What are the differences between the LCMD AI Pod and AMD 395?

① The AI Pod uses NVIDIA chips with 275T of actual computing power (no exaggeration). AMD's theoretical value is 120T, but its actual performance is discounted by 50%.

② The AI Pod is compatible with the CUDA ecosystem, so any AI model can be downloaded and used directly. Many AI models are not compatible with AMD.

③ The AI Pod comes with AI software integrated with the MicroServer, offering out-of-the-box functionality. AMD requires additional setup for AI software.

What are the advantages of purchasing the LCMD AI Pod + LCMD MicroServer bundle?

① The LCMD MicroServer offers more self-developed AI applications. No need to tinker with the AI development environment. Ready to use right out of the box.

② The LCMD MicroServer provides intranet penetration (NAT traversal), allowing users to access the AI Pod from their mobile phone even when away from home.

③ The LCMD MicroServer offers a personal knowledge base App, making AI analysis of data at home safer.

How do I use the LCMD AI Pod if I purchase it separately?

① The LCMD AI Pod is a standalone Ubuntu AI computer that can be used by plugging in a mouse, keyboard, and monitor.

The LCMD AI Pod

Space White

Sandblasted, baked-paint finish

Star Gray

Anodized finish

Basic Information

Model

X3

Certified Model

LC2379

Computing Power

275 TOPS

VRAM

64GB

OS

Ubuntu

Dimensions

144 x 130 x 61 mm (L x W x H)

Weight

2.3 kg

GPU

Parameters

NVIDIA Jetson Orin 64GB | 64 Tensor Cores, 2048 CUDA Cores | NVIDIA Ampere architecture | Freq 1.3 GHz

VRAM

Parameters

64GB 256-bit LPDDR5 | 204.8GB/s

CPU

Parameters

12-core Arm® Cortex®-A78AE v8.2 | 64-bit CPU 3MB L2 + 6MB L3 | 2.2 GHz

Storage

Model Storage

2 x NVMe M.2 | Max 32 TB | PCIe 4.0 x4 | Theoretical Speed 7000MB/s | Form Factor 2280

System Storage

1 x eMMC 5.1 | 64GB

Wired LAN Ports

2.5 Gb

Realtek RJ45 High-Speed Port | 2.5GbE Ethernet Interface | Compatible with 10/100/1000/2500 Mbps | Supports Wake-on-LAN

10 Gb

Marvell RJ45 High-Speed Port | 10GbE Ethernet Interface | Compatible with 10/100/1000/2500/5000/10000 Mbps | Supports Wake-on-LAN

Communication

Wi-Fi

Haihua Wi-Fi 6 XB560NF Wireless Network Card | Dual Patch Antennas

Bluetooth

Bluetooth 5.4

Connection Ports

TypeC

1 x Type-C (USB 3.2 Gen2) | 10Gbps

USB

2 x USB 3.2 Gen1 | 5Gbps

Wired LAN

1 x 2.5GbE, 1 x 10GbE

HDMI

1 x HDMI 2.1 8K

Power

1 x DC 5525

Cooling System

Direct Blow Hydraulic Silent Fan

10mm Large Fan | Silent FDB Bearing, Speed 3500±10% RPM | Self-developed Silent Speed Control | Voltage 12V

Power Supply

Gallium nitride wide-voltage adapter

Specification 5.5x2.5mm | Input 100V ~ 240V | Output 12V/8A | Freq 50/60Hz | Supports UPS
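Several figures in the table above support quick back-of-envelope estimates. The 256-bit bus and 204.8GB/s bandwidth together imply the memory's transfer rate. Bandwidth also caps decoding speed, because generating each token reads roughly every active weight once; this is a common rule of thumb that ignores KV-cache reads and compute limits, so real speeds are lower. The load-time helper uses the 7000MB/s theoretical NVMe speed from the table. Function names are illustrative.

```python
BUS_WIDTH_BITS = 256     # spec table: 64GB 256-bit LPDDR5
BANDWIDTH_GB_S = 204.8   # spec table: 204.8GB/s
NVME_READ_MB_S = 7000    # spec table: theoretical speed 7000MB/s


def implied_transfer_rate_mt_s(bandwidth_gb_s: float = BANDWIDTH_GB_S,
                               bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Memory transfers per second (MT/s) implied by bandwidth and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return bandwidth_gb_s * 1e9 / bytes_per_transfer / 1e6


def decode_upper_bound_tok_s(active_params: float, bits_per_weight: float,
                             bandwidth_gb_s: float = BANDWIDTH_GB_S) -> float:
    """Rule-of-thumb ceiling for memory-bound decoding: one full read of the active weights per token."""
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


def model_load_seconds(model_size_gb: float, read_mb_s: float = NVME_READ_MB_S) -> float:
    """Best-case time to read a model file from NVMe at the theoretical sequential speed."""
    return model_size_gb * 1000 / read_mb_s


if __name__ == "__main__":
    print(f"Implied transfer rate: {implied_transfer_rate_mt_s():.0f} MT/s")
    print(f"Dense 70B @ 4-bit: at most ~{decode_upper_bound_tok_s(70e9, 4):.1f} tokens/s")
    print(f"Loading a 35 GB model: at least ~{model_load_seconds(35):.1f} s")
```

For mixture-of-experts models, pass only the parameters active per token rather than the total, which is why such models can decode much faster than a dense model of the same total size.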

The LCMD AI Pod, Star Wars Sci-Fi

Only for You

① Handbag

② Main Unit

③ Gallium Nitride Adapter, Gigabit Network Cable

④ Packaging Box
